
Docker - Architecture

One of the hardest tasks for DevOps and SRE teams is managing application dependencies and technology stacks across many cloud and development environments. Their goal is usually to keep the application working wherever it runs, without changing much of its code.

Docker helps engineers work more efficiently and reduces operational overhead, allowing any developer in any development environment to create robust and reliable apps. Docker is an open platform for building, shipping, and running applications.

Docker lets you decouple your applications from your infrastructure so that you can release software quickly, and it lets you manage your infrastructure the same way you manage your applications. Using Docker's methodology for shipping, testing, and deploying code can significantly reduce the delay between writing code and running it in production.

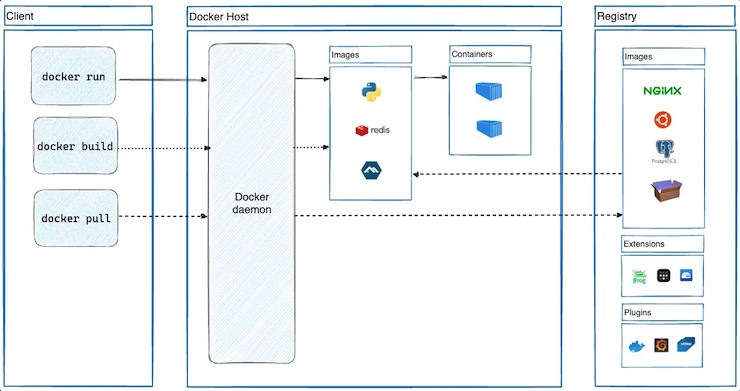

Docker uses a client-server architecture. The Docker client talks to the Docker daemon, which does the heavy lifting of building, running, and distributing Docker containers. The client and daemon can run on the same host, or you can connect a Docker client to a remote daemon. They communicate through a REST API, over a UNIX socket or a network interface.
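To make the choice between a local and a remote daemon concrete, here is a minimal Python sketch of how a client might decide which daemon to contact. It assumes the Docker CLI's convention of reading the DOCKER_HOST environment variable and falling back to the local socket unix:///var/run/docker.sock on Linux. The function name `resolve_daemon_endpoint` is hypothetical, and CLI contexts, TLS settings, and ssh:// hosts are deliberately ignored.

```python
import os
from urllib.parse import urlsplit

# Endpoint the Docker CLI uses on Linux when DOCKER_HOST is unset.
DEFAULT_HOST = "unix:///var/run/docker.sock"


def resolve_daemon_endpoint(env=None):
    """Return ("unix", socket_path) or ("tcp", host, port) for the daemon.

    Simplified sketch: only unix:// and tcp:// URLs are handled; contexts,
    TLS settings and ssh:// hosts are ignored.
    """
    env = os.environ if env is None else env
    # An unset or empty DOCKER_HOST falls back to the local UNIX socket.
    url = urlsplit(env.get("DOCKER_HOST") or DEFAULT_HOST)
    if url.scheme == "unix":
        # unix:///path has an empty network location and an absolute path.
        return ("unix", url.path)
    if url.scheme == "tcp":
        # Assumption: 2375 (the conventional plain-HTTP port) when no port is given.
        return ("tcp", url.hostname, url.port or 2375)
    raise ValueError(f"unsupported DOCKER_HOST scheme: {url.scheme!r}")
```

For example, setting DOCKER_HOST=tcp://192.168.1.10:2376 points the client at a remote daemon instead of the local socket, while the daemon itself stays unchanged.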

In this chapter, let's discuss the Docker architecture in detail.

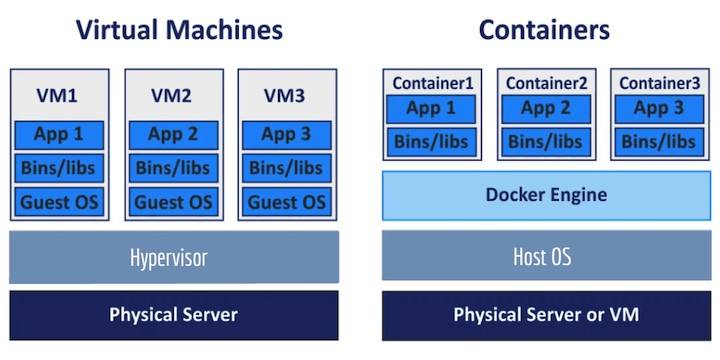

Difference between Containers and Virtual Machines

A virtual machine (VM) lets you perform tasks that would be risky to carry out directly on the host environment. VMs are isolated from the rest of the system, so software running inside a virtual machine cannot interfere with the host computer.

A virtual machine, commonly called a guest, is a software-defined computer (usually stored as an image file) that runs within a physical computing environment known as the host.

A virtual machine can run apps and programs as if it were a separate computer, which makes it well suited to testing other operating systems (including beta versions), creating operating system backups, and installing software and applications. A host can run multiple virtual machines at the same time.

A virtual machine typically consists of several key files, including a log file, an NVRAM settings file, a virtual disk file, and a configuration file.

Server virtualization is another area where virtual machines are extremely useful. Server virtualization divides a physical server into multiple isolated servers, each running its own operating system independently. Each virtual machine has its own virtual hardware, including CPUs, RAM, network interfaces, hard drives, and other components.

Docker, on the other hand, is a software development tool and virtualization technology that lets you easily create, deploy, and manage programs using containers. A container is a lightweight, standalone, executable package of software that includes the libraries, configuration files, dependencies, and other components required to run the application.

Because the container provides the application's environment throughout its software development life cycle, programs run the same way regardless of where they are or which computer they run on.

Because containers are isolated from one another, many containers can run securely side by side on the same host. Containers are also lightweight because they do not need a hypervisor. A hypervisor is the software layer, such as VMware or VirtualBox, that creates and runs virtual machines, whereas containers run directly on the host machine's kernel.

Should I Choose Docker or a Virtual Machine (VM)?

It would be unfair to compare Docker and virtual machines directly, because they are intended for different purposes. Docker is undoubtedly gaining popularity, but it is not a replacement for virtual machines, and in some circumstances a virtual machine is the better option.

Virtual machines are often preferred for production workloads that need strong isolation, because each VM runs its own operating system and is well separated from the host computer. For testing, however, Docker is a strong option because it makes it easy to test software or applications against several OS distributions side by side.

Additionally, Docker containers run on the Docker Engine rather than on a hypervisor, as virtual machines do. Because containers share the host kernel instead of each booting their own, they are compact, isolated, portable, high-performing, and quick to start.

Docker containers add little overhead because they can share a single kernel and application libraries. Many organizations adopt a hybrid approach, because the choice between virtual machines and Docker containers depends on the type of workload being delivered.

Furthermore, relatively few digital businesses rely on virtual machines as their primary choice; many opt for containers instead, because deploying VMs is time-consuming and running microservices on them is one of the biggest obstacles they face. However, some businesses, primarily those that want enterprise-grade security for their infrastructure, still prefer virtual machines to Docker.

Components of Docker Architecture

The key components of the Docker architecture are the Docker Engine, Docker registries, and Docker objects (images, containers, networks, and storage).

Let's discuss each of them to understand how the different components of the Docker architecture interact with each other.

Docker Engine

Docker Engine is the foundation of the Docker platform and handles every stage of the container lifecycle. It consists of three basic components: a command-line interface (CLI), a REST API, and a daemon that does the actual work.

The Docker daemon, commonly known as dockerd, listens for Docker API requests and does the heavy lifting: creating and managing Docker objects such as containers, volumes, images, and networks. A Docker daemon can also communicate with other daemons on the same or different hosts. For example, in a swarm cluster, a host's daemon communicates with the daemons on other nodes to complete tasks.

The Docker API lets applications control the Docker Engine. Programs can use it to look up details about containers or images, manage or upload images, and perform actions such as creating new containers. Because it is an HTTP API, any HTTP client can call it.
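Because the API is plain HTTP, a few lines of standard-library Python are enough to query a local daemon. The sketch below assumes the default socket path /var/run/docker.sock and that the current user is allowed to access it. `UnixHTTPConnection` and `docker_api_get` are hypothetical helper names, not part of any Docker SDK; GET /version and GET /containers/json are Engine API endpoints that correspond roughly to `docker version` and `docker ps`.

```python
import http.client
import json
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTPConnection that talks to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=10):
        # "localhost" only fills the Host header; no TCP connection is made.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        # Replace the TCP connect with a connection to the daemon's socket.
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def docker_api_get(path, socket_path="/var/run/docker.sock"):
    """Send GET <path> to the daemon (assumed socket path) and decode the JSON reply."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        resp = conn.getresponse()
        body = resp.read()
        if resp.status != 200:
            raise RuntimeError(f"{resp.status} {resp.reason}: {body!r}")
        return json.loads(body)
    finally:
        conn.close()
```

On a machine where Docker is running, `docker_api_get("/containers/json")` should return a list with one dictionary per running container, which is the same information the CLI formats for `docker ps`.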

Docker Registries

Docker registries are services that store images and let you retrieve them as needed. A registry is made up of repositories, which keep your images in one place.

Docker Hub is the best-known public registry, and private registries are also popular in organizations. The most common commands for working with registries are docker pull and docker push; docker run also pulls an image automatically if it is not available locally.

Docker Hub, the official Docker registry, hosts many official image repositories. A repository is a collection of related Docker images, usually different versions of the same software, each identified by a tag. Docker Hub offers a wealth of official and vendor-specific images, such as Nginx, Apache, Python, Java, MongoDB, Node, MySQL, Ubuntu, Fedora, and CentOS.

You can also create private repositories on Docker Hub and use the docker push command to store your custom images there. Alternatively, Docker lets you run your own private registry on your local machine: once you've launched a container from the registry image, you can tag your images with that registry's address and push them to it with docker push.
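Image names such as ubuntu, myuser/myapp:v1, or localhost:5000/myimage tell Docker which registry and repository to use. The following simplified Python sketch shows the usual defaulting rules, assuming Docker Hub (docker.io) as the default registry, library/ as the namespace for official images, and latest as the default tag. Digests (@sha256:&lt;digest&gt;) are not handled, and `normalize_image_ref` is a hypothetical name.

```python
def normalize_image_ref(ref):
    """Split an image reference into (registry, repository, tag).

    Simplified sketch of Docker Hub's defaults; digest references are not handled.
    """
    # The tag follows the last ":" that comes after the last "/", so the
    # port in "localhost:5000/app" is not mistaken for a tag.
    name, tag = ref, "latest"
    last_slash = ref.rfind("/")
    colon = ref.rfind(":")
    if colon > last_slash:
        name, tag = ref[:colon], ref[colon + 1:]

    # The first path component is a registry host if it looks like one
    # (contains "." or ":", or is "localhost"); otherwise Docker Hub is implied.
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, repo = first, rest
    else:
        registry, repo = "docker.io", name
        # Single-name images on Docker Hub live in the "library" namespace.
        if "/" not in repo:
            repo = "library/" + repo
    return registry, repo, tag
```

This is why an image tagged localhost:5000/myimage is pushed to a registry listening on port 5000 of the local machine, while plain myimage refers to Docker Hub.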

Docker Objects

When you use Docker, you create and manage images, containers, networks, volumes, plugins, and other objects. This section briefly summarizes some of them.

Docker Images

An image is a read-only template with instructions for creating a Docker container. Often, an image is based on another image with some additional customization. For example, you could build an image based on the Ubuntu image that also includes the Apache web server, your application, and the configuration your application needs to run.

You can create your own images or use images that others have published to a registry. To build your own image, you write a Dockerfile, using a simple syntax to define the steps needed to create the image and run it. Each instruction in a Dockerfile creates a layer in the image. When you change the Dockerfile and rebuild the image, Docker reuses the cached layers up to the first changed instruction and rebuilds only that layer and the ones after it. This is part of why images are lightweight, small, and fast compared to other virtualization approaches.
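The caching rule can be illustrated with a small Python model in which each layer's cache key depends on its own instruction and on every instruction before it. This is a simplification: real builds also take into account the contents of files copied with COPY or ADD, and the function names `layer_keys` and `rebuild_plan` are hypothetical.

```python
import hashlib


def layer_keys(instructions):
    """Give each instruction a cache key that depends on every step before it.

    Simplified model: only the instruction text is hashed, not file contents.
    """
    keys, parent = [], ""
    for instruction in instructions:
        parent = hashlib.sha256((parent + "\n" + instruction).encode()).hexdigest()
        keys.append(parent)
    return keys


def rebuild_plan(old, new):
    """Mark each step of the new Dockerfile as 'cached' or 'rebuild'."""
    cache = set(layer_keys(old))
    return ["cached" if key in cache else "rebuild" for key in layer_keys(new)]


old = [
    "FROM ubuntu:22.04",
    "RUN apt-get update && apt-get install -y apache2",
    "COPY app/ /var/www/html/",
    'CMD ["apachectl", "-D", "FOREGROUND"]',
]
new = old[:2] + ["COPY site/ /var/www/html/"] + old[3:]
plan = rebuild_plan(old, new)  # ['cached', 'cached', 'rebuild', 'rebuild']
```

Changing the COPY line invalidates that step and the CMD after it, while the FROM and RUN layers are reused. This is why Dockerfiles usually place rarely changing steps, such as package installation, before frequently changing ones.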

You can use the docker build command to create a Docker image from a Dockerfile:

$ docker build -t myimage .

Here, -t names the image myimage; you can also give it a tag in the form name:tag, and the tag defaults to latest when omitted. The dot at the end sets the build context to the current directory, which is also where Docker looks for the Dockerfile by default.

Docker Containers

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. You can connect a container to one or more networks, attach storage to it, or even create a new image based on its current state.

By default, a container is relatively well isolated from other containers and the host machine. You control how isolated a container's network, storage, and other underlying subsystems are from other containers and the host.

A container is defined by its image as well as any configuration parameters you specify when creating or starting it. When a container is removed, any changes to its state that aren't persistent disappear.

A container can use only the resources you grant it. The image, built from the Dockerfile, supplies the filesystem and default settings, while options passed to docker run control network connections, storage, CPU and memory limits, published ports, and so on. For example, if you need a container with Java libraries installed, you can start one from a Java image on Docker Hub with the docker run command.

You can use the docker run command to create and start a container:

$ docker run -i -t ubuntu /bin/bash

Here, -i keeps STDIN open and -t allocates a pseudo-TTY (the two are often combined as -it), which together start the container in interactive mode. /bin/bash is the command to run when the container starts, which gives you a bash shell inside the container.
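Under the hood, docker run is roughly a POST /containers/create request followed by POST /containers/{id}/start. The Python sketch below builds a create request body similar to what the CLI sends. The field names (Image, Cmd, Tty, OpenStdin, HostConfig.Memory, HostConfig.PortBindings) come from the Engine API, but the helper name `run_to_create_body` and its parameters are hypothetical, and options such as StdinOnce and detached mode are left out.

```python
def run_to_create_body(image, cmd=None, interactive=False, tty=False,
                       memory_bytes=None, publish=None):
    """Build a POST /containers/create body similar to what `docker run` sends.

    Sketch only: assumes a foreground container; publish maps container
    ports such as "80/tcp" to host ports such as "8080".
    """
    body = {
        "Image": image,
        "Tty": tty,                   # -t: allocate a pseudo-TTY
        "OpenStdin": interactive,     # -i: keep STDIN open
        "AttachStdin": interactive,
        "AttachStdout": True,         # foreground run: stream output back
        "AttachStderr": True,
        "HostConfig": {},             # runtime settings chosen at create time
    }
    if cmd:
        body["Cmd"] = list(cmd)       # otherwise the image's default command runs
    if memory_bytes is not None:
        body["HostConfig"]["Memory"] = memory_bytes  # like --memory
    if publish:
        # Like -p 8080:80: expose the container port and bind it on the host.
        body["ExposedPorts"] = {port: {} for port in publish}
        body["HostConfig"]["PortBindings"] = {
            port: [{"HostPort": host_port}] for port, host_port in publish.items()
        }
    return body
```

For instance, `run_to_create_body("ubuntu", ["/bin/bash"], interactive=True, tty=True)` corresponds to the docker run -i -t ubuntu /bin/bash example. Passing `memory_bytes` or `publish` mirrors the --memory and -p options, which are set when the container is created rather than in the Dockerfile.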

Docker Networking

Docker networking lets isolated containers communicate with each other and with the outside world. Four commonly used network drivers in Docker are −

Bridge

This is the default network driver. Containers attached to the same bridge network can communicate with each other, while the bridge isolates them from containers that are not connected to it. Containers can still make outbound connections to the outside world through the host, and you publish ports to accept incoming traffic. Use this driver when your application runs in standalone containers on a single host.

Host

This driver removes network isolation between the container and the Docker host: the container uses the host's network stack directly. For example, a service listening on port 80 inside the container is reachable on port 80 of the host, with no port mapping needed.

Overlay Network

This driver creates a software-defined network that spans multiple Docker daemon hosts, so containers running on different hosts (for example, the services of a swarm cluster) can communicate as if they were on the same network. Docker takes care of routing traffic to the correct host. Separately, the none driver disables networking for a container entirely.

macvlan

The macvlan driver assigns a MAC address to each container so that it behaves like a physical device on your network. What distinguishes it is that the Docker daemon routes traffic to containers by their MAC addresses rather than through the host's network stack. Use this network when you want containers to look like physical devices, such as when migrating from a VM setup.
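Networks are created through the same API. The Python sketch below assembles a POST /networks/create body and checks that the gateway lies inside the subnet, much like `docker network create -d macvlan --subnet=192.168.1.0/24 --gateway=192.168.1.1 -o parent=eth0 lan` on the command line. The helper name `network_create_body` is hypothetical, eth0 stands in for whatever host interface you use, and requiring a parent interface for macvlan is a simplification of this sketch.

```python
import ipaddress

# Drivers you create networks with; "host" and "none" are used through the
# predefined networks of the same name instead (e.g. --network host).
CREATABLE_DRIVERS = {"bridge", "overlay", "macvlan"}


def network_create_body(name, driver="bridge", subnet=None, gateway=None,
                        parent=None):
    """Build a POST /networks/create body, roughly like `docker network create`."""
    if driver not in CREATABLE_DRIVERS:
        raise ValueError(f"unsupported driver for this sketch: {driver}")
    body = {"Name": name, "Driver": driver}
    if subnet:
        net = ipaddress.ip_network(subnet)
        config = {"Subnet": str(net)}
        if gateway:
            # The gateway must be an address inside the subnet.
            if ipaddress.ip_address(gateway) not in net:
                raise ValueError(f"gateway {gateway} is outside {net}")
            config["Gateway"] = gateway
        body["IPAM"] = {"Config": [config]}
    if driver == "macvlan":
        # Assumption of this sketch: macvlan is tied to a host interface.
        if not parent:
            raise ValueError("macvlan needs a parent host interface, e.g. eth0")
        body["Options"] = {"parent": parent}
    return body
```

A plain `network_create_body("app-net")` describes a user-defined bridge network, which is the usual choice for standalone containers that should reach each other by name.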

Docker Storage

There are several options for storing data. For example, you can store data in a container's writable layer, which is managed by a storage driver. The problem with this approach is that the data is tied to the container: it is lost when the container is removed, unless you have saved it somewhere else.

When it comes to persistent storage in Docker containers, there are four alternatives −

Data volumes

Volumes provide persistent storage that Docker manages: you can create, list, inspect, and remove volumes, and see which containers use them. A volume's data lives outside the container's copy-on-write layer, by default in a directory on the host, and volume drivers can place it on remote storage such as S3 or Azure.

Volume Container

A volume container is an alternative in which a dedicated container holds a volume that other containers then mount, for example with the --volumes-from option. The volume container is independent of the application containers, so the same data can be shared across many containers.

Directory Mounts

A third option is to mount a directory from the host into the container (a bind mount). Whereas volumes are kept in Docker's own storage area on the host (/var/lib/docker/volumes on Linux), a directory mount can come from any directory on the host computer.

Storage Plugins

Storage plugins allow Docker to connect to external storage systems, such as a storage array or device, so that container data is kept on that external storage rather than on the host's local disk. For example, a GlusterFS volume plugin lets containers store their data on a GlusterFS cluster.
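Volumes and directory (bind) mounts are both requested with the docker run --mount option, which Docker turns into an entry in the container's HostConfig.Mounts list. The Python sketch below parses a --mount value into that form. It assumes only the volume, bind, and tmpfs types and the common key aliases (src, dst, destination, ro), and `parse_mount` is a hypothetical name rather than Docker's own parser.

```python
def parse_mount(spec):
    """Turn a --mount value such as 'type=bind,src=/srv/app,dst=/app,readonly'
    into a Mount object for HostConfig.Mounts (simplified sketch)."""
    aliases = {"src": "source", "dst": "target", "destination": "target",
               "ro": "readonly"}
    fields = {}
    for item in spec.split(","):
        key, sep, value = item.partition("=")
        key = aliases.get(key.strip(), key.strip())
        # A bare flag such as "readonly" means true.
        fields[key] = value.strip() if sep else "true"

    mount_type = fields.get("type", "volume")  # volume is the default type
    if mount_type not in {"volume", "bind", "tmpfs"}:
        raise ValueError(f"unsupported mount type: {mount_type}")
    if "target" not in fields:
        raise ValueError("a mount needs a target path inside the container")
    if mount_type == "bind" and not fields.get("source", "").startswith("/"):
        raise ValueError("bind mounts need an absolute host path as source")

    mount = {"Type": mount_type, "Target": fields["target"],
             "ReadOnly": fields.get("readonly", "false") in {"true", "1"}}
    if "source" in fields:
        # Volume name for volumes, host directory for bind mounts.
        mount["Source"] = fields["source"]
    return mount
```

For example, type=volume,source=mydata,target=/data keeps data in a named volume that outlives the container, while type=bind,src=/srv/app,dst=/app,readonly exposes a host directory to the container read-only.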

Conclusion

To summarize, Docker transforms the way developers and IT operations handle application dependencies and deployment processes across multiple environments.

Docker's architecture effectively decouples applications from their underlying infrastructure, easing the process of developing, shipping, and running software. This approach dramatically reduces the time and effort needed to move from code development to production, improving the agility and scalability of software development processes.

Docker containers' lightweight nature, paired with their isolation features, creates a stable and consistent environment for application execution, regardless of the host system.

Ultimately, Docker's comprehensive architecture, which includes components such as Docker Engine, registries, images, containers, networking, and storage, provides enterprises with the tools they need to maintain high-performance, dependable, and secure application environments.

While Docker and virtual machines have different uses and characteristics, Docker's containerization technology is well suited to modern, cloud-native apps and microservices architectures. Organizations that use Docker in their DevOps operations can improve the efficiency, flexibility, and speed with which they build and deploy software.

FAQ

Q 1. What are the key components of Docker architecture?

The Docker architecture is made up of multiple critical components. The Docker Engine consists of the Docker daemon, REST API, and command-line interface (CLI), which all work together to manage container lifecycles. Docker images are read-only templates used to generate containers that contain the program and its dependencies.

Containers are running instances of images that serve as isolated environments for applications. Docker registries, such as Docker Hub, store and distribute images, making them readily available.

Docker networking enables containers to connect with one another and with other systems, while storage solutions such as volumes ensure data persistence beyond the lifecycle of the containers.

Q 2. Can Docker be used for both development and production environments?

Yes, Docker is designed to be used in development, testing, and production settings, ensuring a consistent environment for applications at all phases. Docker enables developers to create isolated containers that replicate the production environment, ensuring that programs execute consistently.

During testing, containers can be used to set up repeatable test environments, which speeds up the process and reduces issues caused by differences between environments. In production, orchestration tools such as Docker Swarm and Kubernetes handle container deployment, scaling, and management.