CycleGAN and StyleGAN

Read this chapter to understand CycleGAN and StyleGAN and how they stand out for their remarkable capabilities in generating and transforming images.

What is a Cycle-Consistent Generative Adversarial Network?

CycleGAN, short for Cycle-Consistent Generative Adversarial Network, is a GAN framework designed to transfer the characteristics of one image domain to another. In other words, CycleGAN is designed for unpaired image-to-image translation tasks, where the training set contains no matched pairs of input and output images.

In contrast to paired image-to-image translation models such as pix2pix, which need a matching output image for every training input, CycleGAN can learn mappings between two different domains from two unaligned collections of images.

How does a CycleGAN Work?

CycleGAN works by treating the task as an image reconstruction problem. Let's understand how it works −

- The CycleGAN first takes an input image, say, "X". It then uses a generator, say, "G", to translate the input image into an image in the target domain.

- Once the translation is done, it uses another generator, say, "F", to map the translated image back to the source domain. The result is the reconstructed image, which should closely match the original image "X".
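The round trip through "G" and "F" can be sketched with a toy example. The two functions below are hypothetical stand-ins chosen so that one roughly undoes the other; in a real CycleGAN both generators are convolutional networks learned from data, and the "image" would be a tensor of pixels rather than a short list.

```python
# Toy stand-ins for the two generators (assumptions for illustration only).
def G(image):
    """Hypothetical X -> Y translator: brightens every pixel."""
    return [min(1.0, p + 0.2) for p in image]

def F(image):
    """Hypothetical Y -> X translator: darkens every pixel."""
    return [max(0.0, p - 0.2) for p in image]

x = [0.1, 0.4, 0.7]             # a tiny "image" from the source domain
translated = G(x)               # forward pass into the target domain
reconstructed = F(translated)   # backward pass to the source domain

# Mean absolute difference between the input and its reconstruction.
cycle_error = sum(abs(a - b) for a, b in zip(x, reconstructed)) / len(x)
print(cycle_error)
```

A small `cycle_error` means the round trip preserved the input, which is the property CycleGAN trains its generators to have.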

Architecture of CycleGAN

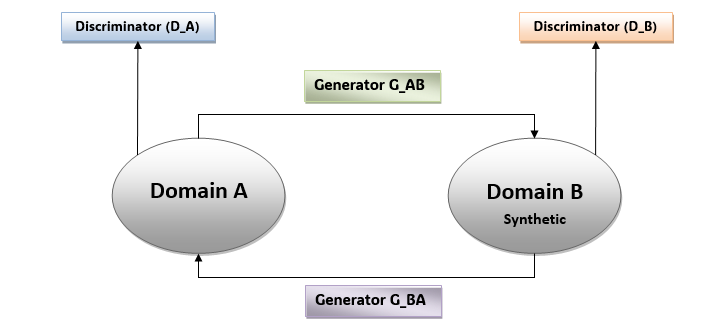

Like a traditional GAN, CycleGAN is built from generators and discriminators, but it uses two of each. Along with these components, CycleGAN introduces the concept of cycle consistency. Let's understand these components of CycleGAN in detail −

Generator Networks (G_AB and G_BA)

CycleGAN has two generator networks, say, G_AB and G_BA. G_AB translates images from domain A to domain B, and G_BA translates them from domain B to domain A. They are trained to fool the discriminators and to minimize the reconstruction error between an original image and its round-trip reconstruction.

Discriminator Networks (D_A and D_B)

CycleGAN has two discriminator networks, say, D_A and D_B. D_A distinguishes real images from translated images in domain A, and D_B does the same in domain B. Through the adversarial loss, they push the generators toward more realistic outputs.
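As a rough illustration of the adversarial loss, the sketch below assumes a least-squares form, in which a discriminator is rewarded for scoring real images near 1 and translated images near 0. The function names and the plain lists of scores are assumptions for this example; in practice the scores come from D_A or D_B applied to batches of images.

```python
def discriminator_loss(scores_real, scores_fake):
    """Least-squares loss for one discriminator: real -> 1, translated -> 0."""
    real_term = sum((s - 1.0) ** 2 for s in scores_real) / len(scores_real)
    fake_term = sum(s ** 2 for s in scores_fake) / len(scores_fake)
    return 0.5 * (real_term + fake_term)

def generator_adversarial_loss(scores_fake):
    """The generator wants its translated images to be scored as real (1)."""
    return sum((s - 1.0) ** 2 for s in scores_fake) / len(scores_fake)

# A discriminator that separates real from translated images perfectly.
print(discriminator_loss([1.0, 1.0], [0.0, 0.0]))
# A generator whose outputs are still easy to spot.
print(generator_adversarial_loss([0.1, 0.2]))
```

The two losses pull in opposite directions on the same scores, which is what makes the training adversarial.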

Cycle Consistency Loss

CycleGAN introduces a third component called Cycle Consistency Loss. It enforces consistency between an original image and its reconstruction after a round trip through both generators (A to B and back to A, and likewise B to A and back to B). With the help of Cycle Consistency Loss, the generators learn meaningful mappings between both domains that preserve the content of the input, while the adversarial loss takes care of the realism of the generated images.
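Written as a formula, the cycle consistency loss is commonly expressed as an L1 distance between each image and its round-trip reconstruction. The symbols p_A and p_B (the image distributions of the two domains) and the weight λ are notation assumed here, not taken from the text above.

```latex
\mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA})
  = \mathbb{E}_{a \sim p_A}\big[\lVert G_{BA}(G_{AB}(a)) - a \rVert_1\big]
  + \mathbb{E}_{b \sim p_B}\big[\lVert G_{AB}(G_{BA}(b)) - b \rVert_1\big]

\mathcal{L}_{\text{total}}
  = \mathcal{L}_{\text{GAN}}(G_{AB}, D_B)
  + \mathcal{L}_{\text{GAN}}(G_{BA}, D_A)
  + \lambda \, \mathcal{L}_{\text{cyc}}(G_{AB}, G_{BA})
```

The hyperparameter λ sets how strongly content preservation is weighted against the two adversarial terms.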

The image at the start of this section shows the schematic diagram of a CycleGAN.

Applications of CycleGAN

CycleGAN finds its applications in various image-to-image translation tasks, including the following −

- Style Transfer − CycleGAN can be used for transferring the style of images between different domains. Examples include converting photos to paintings, day scenes to night scenes, and aerial photos to maps.

- Domain Adaptation − CycleGAN can be used for adapting models trained on synthetic data to real-world data. This can improve generalization and performance in tasks like object detection and semantic segmentation.

- Image Enhancement − CycleGAN can be used for enhancing the quality of images by removing artifacts, adjusting colors, and improving visual aesthetics.

What is a Style Generative Adversarial Network?

StyleGAN, short for Style Generative Adversarial Network, is a GAN framework developed by NVIDIA. StyleGAN is specifically designed for generating photo-realistic, high-quality images.

In contrast to traditional GANs, StyleGAN introduced several techniques that improve image synthesis and give better control over specific attributes of the generated image.

Architecture of StyleGAN

StyleGAN builds on the Progressive GAN architecture and modifies its generator. The discriminator is almost the same as in Progressive GAN. Let's understand how the StyleGAN architecture is different −

Progressive Growing

In comparison with a traditional GAN, StyleGAN uses a progressive growing strategy: training starts at a low image resolution, and the generator and discriminator networks gradually increase in size and complexity as layers for higher resolutions are added. This progressive growing allows StyleGAN to generate images of higher resolution (up to 1024x1024 pixels).
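The growth schedule can be pictured as a list of resolutions. The sketch below assumes that training starts at 4x4 pixels and that each stage doubles the width and height; the starting size and the function name are assumptions for illustration.

```python
def resolution_schedule(start=4, final=1024):
    """Resolutions visited when every growth stage doubles the image size."""
    resolutions = [start]
    while resolutions[-1] < final:
        resolutions.append(resolutions[-1] * 2)
    return resolutions

stages = resolution_schedule()
print(stages)
```

Under these assumptions, nine stages are enough to go from a 4x4 image to a 1024x1024 one.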

Mapping Network

To control the style and appearance of the generated images, StyleGAN uses a mapping network. This mapping network converts each input latent vector into an intermediate latent vector, which is then used to control the style of the generated image.
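A mapping network of this kind can be sketched as a small stack of fully connected layers. Everything below is an illustrative assumption: the names, the tiny dimension of 8, the three layers, and the random untrained weights are chosen only to keep the example short.

```python
import random

def make_layer(n_in, n_out, rng):
    """Create random weights and zero biases for one fully connected layer."""
    weights = [[rng.gauss(0.0, 1.0 / n_in ** 0.5) for _ in range(n_in)]
               for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def leaky_relu(v, slope=0.2):
    return v if v > 0 else slope * v

def mapping_network(z, layers):
    """Pass the input latent vector z through every layer in turn."""
    h = z
    for weights, biases in layers:
        h = [leaky_relu(sum(w * x for w, x in zip(row, h)) + b)
             for row, b in zip(weights, biases)]
    return h

rng = random.Random(0)          # fixed seed so the sketch is repeatable
latent_dim = 8
layers = [make_layer(latent_dim, latent_dim, rng) for _ in range(3)]
z = [rng.gauss(0.0, 1.0) for _ in range(latent_dim)]   # input latent vector
w = mapping_network(z, layers)                          # intermediate latent vector
print(len(w))
```

The output `w` has the same length as `z` here, but it lives in a different, learned space that the generator uses for style control.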

Synthesis Network

StyleGAN also incorporates a synthesis network that takes the intermediate latent vectors produced by the mapping network and generates the final image output. The synthesis network consists of a series of convolutional layers with adaptive instance normalization (AdaIN), which injects the style at each layer and enables the model to generate high-quality images with fine details.
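Adaptive instance normalization can be illustrated on a single feature channel: the channel is first normalized to zero mean and unit variance, then rescaled and shifted by style parameters. In this minimal sketch the `scale` and `bias` values are hand-picked assumptions; in StyleGAN they would be derived from the intermediate latent vector.

```python
import math

def adain(feature_map, scale, bias, eps=1e-8):
    """Normalize one channel, then apply a style-specific scale and bias."""
    n = len(feature_map)
    mean = sum(feature_map) / n
    var = sum((v - mean) ** 2 for v in feature_map) / n
    std = math.sqrt(var + eps)   # eps avoids division by zero on flat channels
    return [scale * (v - mean) / std + bias for v in feature_map]

channel = [1.0, 2.0, 3.0, 4.0]               # one flattened feature channel
styled = adain(channel, scale=2.0, bias=5.0)

styled_mean = sum(styled) / len(styled)
styled_std = math.sqrt(sum((v - styled_mean) ** 2 for v in styled) / len(styled))
print(styled_mean, styled_std)
```

After the operation, the channel's mean and standard deviation are set by the style (close to 5.0 and 2.0 here) rather than by the incoming features.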

Style Mixing Regularization

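StyleGAN also introduces style mixing regularization during training: some training images are generated from two latent vectors instead of one, with the style switching from the first to the second at a randomly chosen layer. This discourages the network from assuming that the styles of neighboring layers are correlated, and it allows the model to combine different styles from multiple latent vectors.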
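Style mixing can be sketched as choosing, for each layer of the synthesis network, which of two latent vectors supplies the style. The function name, the six-layer depth, and the two-element vectors below are assumptions made for illustration.

```python
import random

def mix_styles(w_a, w_b, num_layers, rng):
    """Use w_a for the first layers and w_b after a random crossover point."""
    crossover = rng.randint(1, num_layers - 1)   # both vectors are always used
    per_layer = [w_a if i < crossover else w_b for i in range(num_layers)]
    return per_layer, crossover

rng = random.Random(0)          # fixed seed so the sketch is repeatable
w_a = [0.1, 0.2]                # hypothetical intermediate latent vector A
w_b = [0.9, 0.8]                # hypothetical intermediate latent vector B
styles, crossover = mix_styles(w_a, w_b, num_layers=6, rng=rng)
print(crossover, styles)
```

Layers before the crossover take their style from the first vector and the remaining layers from the second, so one image combines styles from both.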

Applications of StyleGAN

StyleGAN finds its application in various domains, including the following −

Artistic Rendering

Because it offers better control over specific attributes like age, gender, and facial expression, StyleGAN can be used to create realistic portraits, artwork, and other kinds of images.

Fashion and Design

StyleGAN can be used to generate diverse clothing designs, textures, and styles. This feature makes StyleGAN a valuable model in fashion design and virtual try-on applications.

Face Morphing

StyleGAN supports smooth morphing between different facial attributes. This feature makes StyleGAN useful for applications like age progression, gender transformation, and facial expression transfer.

Conclusion

In this chapter, we explained two variants of the traditional Generative Adversarial Network, namely CycleGAN and StyleGAN.

While CycleGAN is designed for unpaired image-to-image translation tasks, where the training data has no matched pairs of input and output images, StyleGAN is specifically designed for generating photo-realistic, high-quality images.

Understanding the architectures and innovations behind both CycleGAN and StyleGAN provides us with an insight into their potential to create realistic output images.