Conditional Generative Adversarial Networks (cGAN)

What is a Conditional GAN?

A Generative Adversarial Network (GAN) is a deep learning framework that can generate new, plausible examples resembling those in a given dataset. A Conditional GAN (cGAN) extends the GAN framework by feeding conditioning information, such as class labels, attributes, or even other data samples, into both the generator and the discriminator networks.

This conditioning information gives us control over the characteristics of the generated output.

Read this chapter to understand the concept of Conditional GANs, their architecture, applications, and challenges.

Where Do We Need a Conditional GAN?

While working with GANs, we may want the model to generate specific types of images. For example, to produce fake pictures of dogs, you train your GAN on a broad range of dog images. The trained model can generate an image of a random dog, but we cannot instruct it to generate an image of a particular breed, say, a Dalmatian or a Rottweiler.

With a Conditional GAN, we pass each training image to the network together with its actual label (Dalmatian, Rottweiler, Pug, etc.) so that the model learns the differences between these breeds. After training, we can supply the label of the breed we want, and the model generates an image of that specific breed.

A Conditional GAN is an extension of the traditional GAN architecture that allows us to generate images by conditioning the network with additional information.

Architecture of Conditional GANs

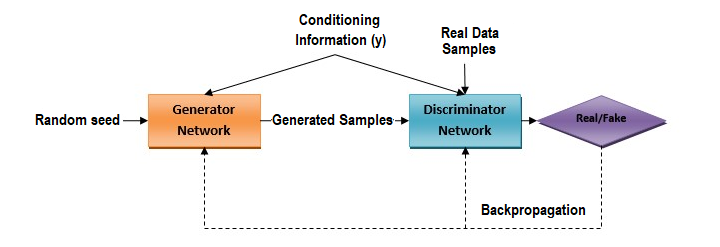

Like traditional GANs, the architecture of a Conditional GAN consists of two main components: a generator network and a discriminator network.

The only difference is that in Conditional GANs, both the generator network and the discriminator network receive additional conditioning information "y" along with their respective inputs. Let's understand it with the help of the diagram above −

The Generator Network

The generator network, as shown in the diagram above, takes two inputs: a random noise vector sampled from a predefined distribution, and the conditioning information "y". It transforms these inputs into synthetic data samples. The goal of the generator is not only to produce data that is indistinguishable from real data but also to produce data that matches the provided conditioning information.
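To make the two inputs concrete, here is a minimal sketch, assuming the simplest conditioning scheme: the class label "y" is one-hot encoded and concatenated with the noise vector z before the first layer. The `ToyGenerator` class, its single linear layer, and the tanh output are hypothetical choices for illustration; real generators are much deeper (often convolutional) and may inject "y" through a learned embedding instead.

```python
import math
import random

def one_hot(label, num_classes):
    """Encode an integer class label y as a one-hot vector."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def sample_noise(dim, rng):
    """Sample a noise vector z from a standard normal distribution."""
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]

class ToyGenerator:
    """Hypothetical one-layer generator: G(z, y) = tanh(W [z; y] + b)."""

    def __init__(self, noise_dim, num_classes, out_dim, seed=0):
        rng = random.Random(seed)
        in_dim = noise_dim + num_classes
        self.num_classes = num_classes
        # Random, untrained weights; training would adjust these.
        self.weights = [[rng.uniform(-0.1, 0.1) for _ in range(in_dim)]
                        for _ in range(out_dim)]
        self.bias = [0.0] * out_dim

    def __call__(self, z, label):
        # Conditioning: concatenate the noise vector with the one-hot label.
        x = list(z) + one_hot(label, self.num_classes)
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                for row, b in zip(self.weights, self.bias)]

rng = random.Random(42)
gen = ToyGenerator(noise_dim=8, num_classes=10, out_dim=4)
z = sample_noise(8, rng)
sample = gen(z, label=3)  # request a sample of class 3
print(len(sample))  # → 4
```

Because the label is part of the input, the same noise vector z produces a different output for each requested class, which is what lets us choose the class at generation time.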

The Discriminator Network

The discriminator network receives both real data samples and fake samples generated by the generator, along with the conditioning information "y".
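A matching sketch for the discriminator, under the same assumption of concatenation-based conditioning: the sample x and the one-hot label are joined into one vector, and a hypothetical single linear layer with a sigmoid outputs the probability that x is a real sample of class "y". The `ToyDiscriminator` name and architecture are illustrative only.

```python
import math
import random

def one_hot(label, num_classes):
    """Encode an integer class label y as a one-hot vector."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))

class ToyDiscriminator:
    """Hypothetical D(x, y) = sigmoid(w . [x; y] + b)."""

    def __init__(self, data_dim, num_classes, seed=0):
        rng = random.Random(seed)
        self.num_classes = num_classes
        self.weights = [rng.uniform(-0.1, 0.1)
                        for _ in range(data_dim + num_classes)]
        self.bias = 0.0

    def __call__(self, x, label):
        # The label is part of the input, so the same x can receive
        # a different score depending on which class it claims to be.
        inp = list(x) + one_hot(label, self.num_classes)
        return sigmoid(sum(w * v for w, v in zip(self.weights, inp)) + self.bias)

disc = ToyDiscriminator(data_dim=4, num_classes=10)
x = [0.5, -0.2, 0.1, 0.9]
p_as_3 = disc(x, 3)  # score for "x is a real sample of class 3"
p_as_7 = disc(x, 7)  # score for "x is a real sample of class 7"
```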

The goal of the discriminator network is to evaluate each input and tell real data samples from the dataset apart from fake samples produced by the generator, while taking the provided conditioning information into account.
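The competing goals of the two networks are commonly written, following the original cGAN formulation by Mirza and Osindero (2014), as the standard GAN minimax objective with both networks conditioned on "y". Here $D(x \mid y)$ is the discriminator's estimated probability that $x$ is real given $y$, and $p_z$ is the noise distribution:

$$
\min_G \max_D V(D, G) = \mathbb{E}_{(x, y) \sim p_{\text{data}}}\big[\log D(x \mid y)\big] + \mathbb{E}_{z \sim p_z,\; y \sim p_y}\big[\log\big(1 - D(G(z \mid y) \mid y)\big)\big]
$$

The discriminator maximizes $V$ by scoring real pairs high and generated pairs low; the generator minimizes $V$ by making $D(G(z \mid y) \mid y)$ close to 1 for the label it was asked to produce.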

We have seen how conditioning information is used in the cGAN architecture. Let's now look at conditional information and its types in more detail.

Conditional Information

Conditional information, often denoted by "y", is additional information provided to both the generator network and the discriminator network to condition the generation process. Depending on the application and the degree of control required over the generated output, conditional information can take various forms.

Types of Conditional Information

Some of the common types of conditional information are as follows −

- Class Labels − In class-conditional image generation, conditional information "y" may represent the class labels corresponding to different categories. For example, in a handwritten digits dataset such as MNIST, "y" could indicate the digit class (0-9) that the generator network should produce.

- Attributes − In image generation tasks, conditional information "y" may represent specific attributes or features of the desired output, such as the color of objects, the style of clothing, or the pose of a person.

- Textual Descriptions − For text-to-image synthesis tasks, conditional information "y" may consist of textual descriptions or captions describing the desired characteristics of the generated image.
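Before "y" can be fed to a network, it must be turned into numbers. A minimal sketch, assuming one-hot vectors for class labels and multi-hot vectors for attributes; the attribute vocabulary below is hypothetical. Textual descriptions usually need a learned text encoder to produce an embedding vector, which is not shown here.

```python
def encode_class_label(label, num_classes):
    """Class label -> one-hot vector, e.g. digit 7 out of classes 0-9."""
    vec = [0.0] * num_classes
    vec[label] = 1.0
    return vec

def encode_attributes(active, vocabulary):
    """Set of active attributes -> multi-hot vector over a fixed vocabulary."""
    return [1.0 if attr in active else 0.0 for attr in vocabulary]

# Hypothetical attribute vocabulary for a clothing-generation task.
ATTRIBUTES = ["red", "blue", "striped", "long_sleeves"]

y_digit = encode_class_label(7, 10)
y_attrs = encode_attributes({"red", "long_sleeves"}, ATTRIBUTES)
```

One-hot vectors allow exactly one class at a time, while multi-hot vectors let several attributes be active together, which matches the difference between the first two bullets above.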

Applications of Conditional GANs

Listed below are some of the fields where Conditional GANs find applications −

Image-to-Image Translation

Conditional GANs are well suited to tasks like translating images from one domain to another. Examples include converting satellite images to maps, transforming sketches into realistic images, and converting daytime scenes to nighttime scenes.
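In image-to-image translation the condition "y" is itself an image. In pix2pix-style cGANs (an assumption about the variant used), the discriminator receives the source image and a real or generated target stacked along the channel axis, so it judges whether the output is a plausible translation of that specific input. A small sketch of that stacking with images stored as hypothetical `[channels][height][width]` nested lists:

```python
def concat_channels(a, b):
    """Stack two [channels][height][width] images along the channel axis."""
    if len(a[0]) != len(b[0]) or len(a[0][0]) != len(b[0][0]):
        raise ValueError("images must have the same height and width")
    return a + b  # list concatenation joins the channel lists

# Hypothetical 2x2 images: a 1-channel sketch (the condition y)
# and a 3-channel RGB image (real or generated output).
sketch = [[[0.0, 1.0], [1.0, 0.0]]]
photo = [[[0.2, 0.8], [0.7, 0.1]],
         [[0.3, 0.9], [0.6, 0.2]],
         [[0.1, 0.5], [0.4, 0.3]]]

d_input = concat_channels(sketch, photo)  # 1 + 3 = 4 channels
```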

Semantic Image Synthesis

Because Conditional GANs can condition on semantic information, they can generate realistic images from textual descriptions or semantic layouts (label maps that assign a class, such as sky, road, or tree, to each region of the image).
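A semantic layout is typically stored as a grid of class ids. A minimal sketch, assuming the layout is converted into one binary channel per class before being passed to the network as "y"; the class names and the 2x3 layout are hypothetical:

```python
def label_map_to_one_hot(label_map, num_classes):
    """Turn an H x W grid of class ids into num_classes binary H x W channels."""
    return [[[1.0 if cls == c else 0.0 for cls in row] for row in label_map]
            for c in range(num_classes)]

# Hypothetical 2x3 layout: 0 = sky, 1 = tree, 2 = road
layout = [[0, 0, 1],
          [2, 2, 1]]
y = label_map_to_one_hot(layout, num_classes=3)
# y[0] marks the sky pixels, y[1] the tree pixels, y[2] the road pixels.
```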

Super-Resolution and Inpainting

Conditional GANs can also be used for image super-resolution, in which a low-resolution image is transformed into a corresponding high-resolution image. They can also be used for inpainting, in which missing parts of an image are filled in based on the surrounding context.
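For inpainting, the condition is the damaged image itself. A minimal sketch, assuming a binary mask marks the missing pixels, the masked image serves as the generator's conditioning input, and the generator's output is pasted back only into the holes (a common post-processing choice, assumed here). The `generated` values are a stand-in for a real generator's output:

```python
def mask_image(image, mask):
    """Zero out missing pixels (mask == 1) to build the conditioning input."""
    return [[0.0 if m else p for p, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]

def composite(image, generated, mask):
    """Keep known pixels from the original; take only the holes from the generator."""
    return [[g if m else p for p, g, m in zip(img_row, gen_row, mask_row)]
            for img_row, gen_row, mask_row in zip(image, generated, mask)]

image = [[0.2, 0.4], [0.6, 0.8]]      # hypothetical 2x2 grayscale image
mask = [[0, 1], [0, 0]]               # 1 marks the missing pixel
condition = mask_image(image, mask)   # [[0.2, 0.0], [0.6, 0.8]]
generated = [[0.1, 0.5], [0.5, 0.9]]  # stand-in for the generator's output
result = composite(image, generated, mask)  # [[0.2, 0.5], [0.6, 0.8]]
```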

Style Transfer and Editing

Conditional GANs allow us to manipulate specific attributes like color, texture, or artistic style while preserving other aspects of the image.
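Attribute editing with a cGAN usually means holding the noise vector z fixed (the "other aspects" of the sample) and changing only the condition "y". A minimal sketch with a hypothetical, untrained one-layer generator; in this toy model every output value shifts, whereas in a well-trained model the hope is that mainly the conditioned attribute changes, which depends on how cleanly the model separates "y" from z:

```python
import math
import random

def make_toy_generator(noise_dim, attr_dim, out_dim, seed=0):
    """Return a hypothetical one-layer generator G(z, y) = tanh(W [z; y])."""
    rng = random.Random(seed)
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(noise_dim + attr_dim)]
               for _ in range(out_dim)]

    def generate(z, y):
        x = list(z) + list(y)
        return [math.tanh(sum(w * v for w, v in zip(row, x))) for row in weights]

    return generate

G = make_toy_generator(noise_dim=4, attr_dim=3, out_dim=5)
z = [0.3, -1.2, 0.8, 0.05]     # fixed noise shared by both outputs
y_original = [1.0, 0.0, 0.0]   # hypothetical attribute 0, e.g. "red"
y_edited = [0.0, 1.0, 0.0]     # switch to hypothetical attribute 1, e.g. "blue"

before = G(z, y_original)
after = G(z, y_edited)
```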

Challenges in Using Conditional GANs

Conditional GANs offer significant advances in generative modeling, but they also come with challenges. Let's see which kinds of challenges you may face while using Conditional GANs −

Mode Collapse

Like traditional GANs, Conditional GANs can experience mode collapse. In mode collapse, the generator learns to produce only a limited variety of samples and fails to capture the entire data distribution.
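In a Conditional GAN, collapse often shows up per class: the generator returns (nearly) the same output for a label regardless of z. A deliberately simple diagnostic sketch, assuming generated samples are grouped by label and compared by exact equality; real images would need a feature-based or perceptual similarity measure instead, so treat this as an illustration of the idea rather than a practical metric:

```python
from collections import defaultdict

def distinct_ratio_per_class(samples):
    """samples: iterable of (label, output) pairs, with hashable outputs.

    Returns, for each label, the fraction of generated outputs that are distinct.
    A value of 1/n (all n outputs identical) suggests collapse for that class.
    """
    by_label = defaultdict(list)
    for label, output in samples:
        by_label[label].append(output)
    return {label: len(set(outs)) / len(outs) for label, outs in by_label.items()}

# Hypothetical outputs for two classes, three noise vectors each.
samples = [(0, (1, 2)), (0, (1, 2)), (0, (1, 2)),   # class 0: always identical
           (1, (0, 1)), (1, (3, 4)), (1, (5, 6))]   # class 1: all different
ratios = distinct_ratio_per_class(samples)
```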

Conditioning Information Quality

The effectiveness of Conditional GANs depends on the quality and relevance of the provided conditioning information. Noisy or irrelevant conditioning information can lead to poor generation outputs.

Training Instability

Conditional GANs can also suffer from the training instability observed in traditional GANs. To mitigate this, cGANs require careful architecture design and training procedures.

Scalability

As the conditioning information becomes more complex, Conditional GANs become more difficult to train and require more computational resources.

Conclusion

Conditional GAN (cGAN) extends the GAN framework by feeding conditioning information, such as class labels, attributes, or even other data samples, into both the generator and the discriminator. This gives us control over the characteristics of the generated output.

From image-to-image translation to semantic image synthesis, Conditional GANs find their applications across various domains.