- Beautiful Soup - Home

- Beautiful Soup - Overview

- Beautiful Soup - Web Scraping

- Beautiful Soup - Installation

- Beautiful Soup - Souping the Page

- Beautiful Soup - Kinds of objects

- Beautiful Soup - Inspect Data Source

- Beautiful Soup - Scrape HTML Content

- Beautiful Soup - Navigating by Tags

- Beautiful Soup - Find Elements by ID

- Beautiful Soup - Find Elements by Class

- Beautiful Soup - Find Elements by Attribute

- Beautiful Soup - Searching the Tree

- Beautiful Soup - Modifying the Tree

- Beautiful Soup - Parsing a Section of a Document

- Beautiful Soup - Find all Children of an Element

- Beautiful Soup - Find Element using CSS Selectors

- Beautiful Soup - Find all Comments

- Beautiful Soup - Scraping List from HTML

- Beautiful Soup - Scraping Paragraphs from HTML

- BeautifulSoup - Scraping Link from HTML

- Beautiful Soup - Get all HTML Tags

- Beautiful Soup - Get Text Inside Tag

- Beautiful Soup - Find all Headings

- Beautiful Soup - Extract Title Tag

- Beautiful Soup - Extract Email IDs

- Beautiful Soup - Scrape Nested Tags

- Beautiful Soup - Parsing Tables

- Beautiful Soup - Selecting nth Child

- Beautiful Soup - Search by text inside a Tag

- Beautiful Soup - Remove HTML Tags

- Beautiful Soup - Remove all Styles

- Beautiful Soup - Remove all Scripts

- Beautiful Soup - Remove Empty Tags

- Beautiful Soup - Remove Child Elements

- Beautiful Soup - find vs find_all

- Beautiful Soup - Specifying the Parser

- Beautiful Soup - Comparing Objects

- Beautiful Soup - Copying Objects

- Beautiful Soup - Get Tag Position

- Beautiful Soup - Encoding

- Beautiful Soup - Output Formatting

- Beautiful Soup - Pretty Printing

- Beautiful Soup - NavigableString Class

- Beautiful Soup - Convert Object to String

- Beautiful Soup - Convert HTML to Text

- Beautiful Soup - Parsing XML

- Beautiful Soup - Error Handling

- Beautiful Soup - Trouble Shooting

- Beautiful Soup - Porting Old Code

Beautiful Soup - Functions Reference

- Beautiful Soup - contents Property

- Beautiful Soup - children Property

- Beautiful Soup - string Property

- Beautiful Soup - strings Property

- Beautiful Soup - stripped_strings Property

- Beautiful Soup - descendants Property

- Beautiful Soup - parent Property

- Beautiful Soup - parents Property

- Beautiful Soup - next_sibling Property

- Beautiful Soup - previous_sibling Property

- Beautiful Soup - next_siblings Property

- Beautiful Soup - previous_siblings Property

- Beautiful Soup - next_element Property

- Beautiful Soup - previous_element Property

- Beautiful Soup - next_elements Property

- Beautiful Soup - previous_elements Property

- Beautiful Soup - find Method

- Beautiful Soup - find_all Method

- Beautiful Soup - find_parents Method

- Beautiful Soup - find_parent Method

- Beautiful Soup - find_next_siblings Method

- Beautiful Soup - find_next_sibling Method

- Beautiful Soup - find_previous_siblings Method

- Beautiful Soup - find_previous_sibling Method

- Beautiful Soup - find_all_next Method

- Beautiful Soup - find_next Method

- Beautiful Soup - find_all_previous Method

- Beautiful Soup - find_previous Method

- Beautiful Soup - select Method

- Beautiful Soup - append Method

- Beautiful Soup - extend Method

- Beautiful Soup - NavigableString Method

- Beautiful Soup - new_tag Method

- Beautiful Soup - insert Method

- Beautiful Soup - insert_before Method

- Beautiful Soup - insert_after Method

- Beautiful Soup - clear Method

- Beautiful Soup - extract Method

- Beautiful Soup - decompose Method

- Beautiful Soup - replace_with Method

- Beautiful Soup - wrap Method

- Beautiful Soup - unwrap Method

- Beautiful Soup - smooth Method

- Beautiful Soup - prettify Method

- Beautiful Soup - encode Method

- Beautiful Soup - decode Method

- Beautiful Soup - get_text Method

- Beautiful Soup - diagnose Method

Beautiful Soup Useful Resources

Beautiful Soup - Quick Guide

Beautiful Soup - Overview

In today's world, we have tons of unstructured data/information (mostly web data) available freely. Sometimes the freely available data is easy to read and sometimes not. No matter how your data is available, web scraping is very useful tool to transform unstructured data into structured data that is easier to read and analyze. In other words, web scraping is a way to collect, organize and analyze this enormous amount of data. So let us first understand what is web-scraping.

Introduction to Beautiful Soup

The Beautiful Soup is a python library which is named after a Lewis Carroll poem of the same name in "Alice's Adventures in the Wonderland". Beautiful Soup is a python package and as the name suggests, parses the unwanted data and helps to organize and format the messy web data by fixing bad HTML and present to us in an easily-traversable XML structures.

In short, Beautiful Soup is a python package which allows us to pull data out of HTML and XML documents.

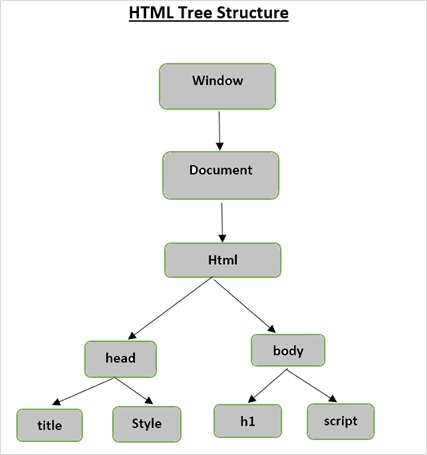

HTML tree Structure

Before we look into the functionality provided by Beautiful Soup, let us first understand the HTML tree structure.

The root element in the document tree is the html, which can have parents, children and siblings and this determines by its position in the tree structure. To move among HTML elements, attributes and text, you have to move among nodes in your tree structure.

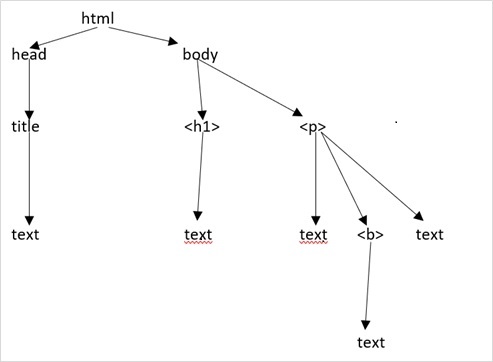

Let us suppose the webpage is as shown below −

Which translates to an html document as follows −

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h1>Tutorialspoint Online Library</h1>

<p><b>It's all Free</b></p>

</body>

</html>

Which simply means, for above html document, we have a html tree structure as follows −

Beautiful Soup - Web Scraping

Scraping is simply a process of extracting (from various means), copying and screening of data.

When we scrape or extract data or feeds from the web (like from web-pages or websites), it is termed as web-scraping.

So, web scraping (which is also known as web data extraction or web harvesting) is the extraction of data from web. In short, web scraping provides a way to the developers to collect and analyze data from the internet.

Why Web-scraping?

Web-scraping provides one of the great tools to automate most of the things a human does while browsing. Web-scraping is used in an enterprise in a variety of ways −

Data for Research

Smart analyst (like researcher or journalist) uses web scrapper instead of manually collecting and cleaning data from the websites.

Products, prices & popularity comparison

Currently there are couple of services which use web scrappers to collect data from numerous online sites and use it to compare products popularity and prices.

SEO Monitoring

There are numerous SEO tools such as Ahrefs, Seobility, SEMrush, etc., which are used for competitive analysis and for pulling data from your client's websites.

Search engines

There are some big IT companies whose business solely depends on web scraping.

Sales and Marketing

The data gathered through web scraping can be used by marketers to analyze different niches and competitors or by the sales specialist for selling content marketing or social media promotion services.

Why Python for Web Scraping?

Python is one of the most popular languages for web scraping as it can handle most of the web crawling related tasks very easily.

Below are some of the points on why to choose python for web scraping −

Ease of Use

As most of the developers agree that python is very easy to code. We don't have to use any curly braces "{ }" or semi-colons ";" anywhere, which makes it more readable and easy-to-use while developing web scrapers.

Huge Library Support

Python provides huge set of libraries for different requirements, so it is appropriate for web scraping as well as for data visualization, machine learning, etc.

Easily Explicable Syntax

Python is a very readable programming language as python syntax are easy to understand. Python is very expressive and code indentation helps the users to differentiate different blocks or scopes in the code.

Dynamically-typed language

Python is a dynamically-typed language, which means the data assigned to a variable tells, what type of variable it is. It saves lot of time and makes work faster.

Huge Community

Python community is huge which helps you wherever you stuck while writing code.

Beautiful Soup - Installation

Beautiful Soup is a library that makes it easy to scrape information from web pages. It sits atop an HTML or XML parser, providing Pythonic idioms for iterating, searching, and modifying the parse tree.

BeautifulSoup package is not a part of Python's standard library, hence it must be installed. Before installing the latest version, let us create a virtual environment, as per Python's recommended method.

A virtual environment allows us to create an isolated working copy of python for a specific project without affecting the outside setup.

We shall use venv module in Python's standard library to create virtual environment. PIP is included by default in Python version 3.4 or later.

Use the following command to create virtual environment in Windows

D:\beautiful_soup>py -m venv myenv

On Ubuntu Linux, update the APT repo and install venv if required before creating virtual environment

mvl@GNVBGL3:~ $ sudo apt update && sudo apt upgrade -y mvl@GNVBGL3:~ $ sudo apt install python3-venv

Then use the following command to create a virtual environment

mvl@GNVBGL3:~ $ sudo python3 -m venv myenv

You need to activate the virtual environment. On Windows use the command

D:\beautiful_soup>cd myenv D:\beautiful_soupmyenv>scripts\activate (myenv) D:\beautiful_soup\myenv>

On Ubuntu Linux, use following command to activate the virtual environment

mvl@GNVBGL3:~$ cd myenv mvl@GNVBGL3:~/myenv$ source bin/activate (myenv) mvl@GNVBGL3:~/myenv$

Name of the virtual environment appears in the parenthesis. Now that it is activated, we can now install BeautifulSoup in it.

D:\beautiful_soup\myenv\pythonProject> pip3 install beautifulsoup4

Collecting beautifulsoup4

Obtaining dependency information for beautifulsoup4 from https://files.pythonhosted.org/packages/1a/39/47f9197bdd44df24d67ac8893641e16f386c984a0619ef2ee4c51fbbc019/beautifulsoup4-4.14.3-py3-none-any.whl.metadata

Downloading beautifulsoup4-4.14.3-py3-none-any.whl.metadata (3.8 kB)

Collecting soupsieve>=1.6.1 (from beautifulsoup4)

Obtaining dependency information for soupsieve>=1.6.1 from https://files.pythonhosted.org/packages/14/a0/bb38d3b76b8cae341dad93a2dd83ab7462e6dbcdd84d43f54ee60a8dc167/soupsieve-2.8-py3-none-any.whl.metadata

Downloading soupsieve-2.8-py3-none-any.whl.metadata (4.6 kB)

Collecting typing-extensions>=4.0.0 (from beautifulsoup4)

Obtaining dependency information for typing-extensions>=4.0.0 from https://files.pythonhosted.org/packages/18/67/36e9267722cc04a6b9f15c7f3441c2363321a3ea07da7ae0c0707beb2a9c/typing_extensions-4.15.0-py3-none-any.whl.metadata

Downloading typing_extensions-4.15.0-py3-none-any.whl.metadata (3.3 kB)

Downloading beautifulsoup4-4.14.3-py3-none-any.whl (107 kB)

ââââââââââââââââââââââââââââââââââââââââ 107.7/107.7 kB 3.1 MB/s eta 0:00:00

Downloading soupsieve-2.8-py3-none-any.whl (36 kB)

Downloading typing_extensions-4.15.0-py3-none-any.whl (44 kB)

ââââââââââââââââââââââââââââââââââââââââ 44.6/44.6 kB ? eta 0:00:00

Installing collected packages: typing-extensions, soupsieve, beautifulsoup4

Successfully installed beautifulsoup4-4.14.3 soupsieve-2.8 typing-extensions-4.15.0

Note that the latest version of Beautifulsoup4 is 4.12.2 and requires Python 3.8 or later.

If you don't have easy_install or pip installed, you can download the Beautiful Soup 4 source tarball and install it with setup.py.

(myenv) mvl@GNVBGL3:~/myenv$ python setup.py install

To check if Beautifulsoup is properly install, enter following commands in Python terminal −

>>> import bs4 >>> bs4.__version__ '4.14.3'

If the installation hasn't been successful, you will get ModuleNotFoundError.

You will also need to install requests library. It is a HTTP library for Python.

pip3 install requests

Installing a Parser

By default, Beautiful Soup supports the HTML parser included in Python's standard library, however it also supports many external third party python parsers like lxml parser or html5lib parser.

To install lxml or html5lib parser, use the command:

pip3 install lxml pip3 install html5lib

These parsers have their advantages and disadvantages as shown below −

Parser: Python's html.parser

Usage − BeautifulSoup(markup, "html.parser")

Advantages

- Batteries included

- Decent speed

- Lenient (As of Python 3.2)

Disadvantages

- Not as fast as lxml, less lenient than html5lib.

Parser: lxml's HTML parser

Usage − BeautifulSoup(markup, "lxml")

Advantages

- Very fast

- Lenient

Disadvantages

- External C dependency

Parser: lxml's XML parser

Usage − BeautifulSoup(markup, "lxml-xml")

Or BeautifulSoup(markup, "xml")

Advantages

- Very fast

- The only currently supported XML parser

Disadvantages

- External C dependency

Parser: html5lib

Usage − BeautifulSoup(markup, "html5lib")

Advantages

- Extremely lenient

- Parses pages the same way a web browser does

- Creates valid HTML5

Disadvantages

- Very slow

- External Python dependency

Beautiful Soup - Souping the page

It is time to test our Beautiful Soup package in one of the html pages (taking web page - https://www.tutorialspoint.com/index.htm, you can choose any-other web page you want) and extract some information from it.

In the below code, we are trying to extract the title from the webpage −

Example - Extracting Title from a Web Page

tester.py

from bs4 import BeautifulSoup import requests url = "https://www.tutorialspoint.com/index.htm" req = requests.get(url) soup = BeautifulSoup(req.content, "html.parser") print(soup.title)

Output

Run the above code and verify the output.

<title>Online Courses and eBooks Library<title>

One common task is to extract all the URLs within a webpage. For that we just need to add the below line of code −

for link in soup.find_all('a'):

print(link.get('href'))

Output

Shown below is the partial output of the above loop −

https://www.tutorialspoint.com/index.htm https://www.tutorialspoint.com/codingground.htm https://www.tutorialspoint.com/about/about_careers.htm https://www.tutorialspoint.com/whiteboard.htm https://www.tutorialspoint.com/online_dev_tools.htm https://www.tutorialspoint.com/business/index.asp https://www.tutorialspoint.com/market/teach_with_us.jsp https://www.facebook.com/tutorialspointindia https://www.instagram.com/tutorialspoint_/ https://twitter.com/tutorialspoint https://www.youtube.com/channel/UCVLbzhxVTiTLiVKeGV7WEBg https://www.tutorialspoint.com/categories/development https://www.tutorialspoint.com/categories/it_and_software https://www.tutorialspoint.com/categories/data_science_and_ai_ml https://www.tutorialspoint.com/categories/cyber_security https://www.tutorialspoint.com/categories/marketing https://www.tutorialspoint.com/categories/office_productivity https://www.tutorialspoint.com/categories/business https://www.tutorialspoint.com/categories/lifestyle https://www.tutorialspoint.com/latest/prime-packs https://www.tutorialspoint.com/market/index.asp https://www.tutorialspoint.com/latest/ebooks

To parse a web page stored locally in the current working directory, obtain the file object pointing to the html file, and use it as argument to the BeautifulSoup() constructor.

Example - Parsing a Local Web Page

tester.py

from bs4 import BeautifulSoup

with open("index.html") as fp:

soup = BeautifulSoup(fp, 'html.parser')

print(soup)

Output

<html> <head> <title>Hello World</title> </head> <body> <h1 style="text-align:center;">Hello World</h1> </body> </html>

Example - Parsing a HTML Script passed as String

You can also use a string that contains HTML script as constructor's argument as follows −

tester.py

from bs4 import BeautifulSoup

html = '''

<html>

<head>

<title>Hello World</title>

</head>

<body>

<h1 style="text-align:center;">Hello World</h1>

</body>

</html>

'''

soup = BeautifulSoup(html, 'html.parser')

print(soup)

Output

<html> <head> <title>Hello World</title> </head> <body> <h1 style="text-align:center;">Hello World</h1> </body> </html>

Beautiful Soup uses the best available parser to parse the document. It will use an HTML parser unless specified otherwise.

Beautiful Soup - Kinds of objects

When we pass a html document or string to a beautifulsoup constructor, beautifulsoup basically converts a complex html page into different python objects. Below we are going to discuss four major kinds of objects defined in bs4 package.

- Tag

- NavigableString

- BeautifulSoup

- Comments

Tag Object

A HTML tag is used to define various types of content. A tag object in BeautifulSoup corresponds to an HTML or XML tag in the actual page or document.

Example - Getting type of Tag.

from bs4 import BeautifulSoup

soup = BeautifulSoup('<b class="boldest">TutorialsPoint</b>', 'lxml')

tag = soup.html

print (type(tag))

Output

<class 'bs4.element.Tag'>

Tags contain lot of attributes and methods and two important features of a tag are its name and attributes.

Name (tag.name)

Every tag contains a name and can be accessed through '.name' as suffix. tag.name will return the type of tag it is.

Example - Printing Name of the Tag

from bs4 import BeautifulSoup

soup = BeautifulSoup('<b class="boldest">TutorialsPoint</b>', 'lxml')

tag = soup.html

print (tag.name)

Output

html

However, if we change the tag name, same will be reflected in the HTML markup generated by the BeautifulSoup.

Example - Changing the tag name

from bs4 import BeautifulSoup

soup = BeautifulSoup('<b class="boldest">TutorialsPoint</b>', 'lxml')

tag = soup.html

tag.name = "strong"

print (tag)

Output

<strong><body><b class="boldest">TutorialsPoint</b></body></strong>

Attributes (tag.attrs)

A tag object can have any number of attributes. In the above example, the tag <b class="boldest"> has an attribute 'class' whose value is "boldest". Anything that is NOT tag, is basically an attribute and must contain a value. A dictionary of attributes and their values is returned by "attrs". You can access the attributes either through accessing the keys too.

In the example below, the string argument for Beautifulsoup() constructor contains HTML input tag. The attributes of input tag are returned by "attr".

Example - Printing attributes of a Tag

from bs4 import BeautifulSoup

soup = BeautifulSoup('<input type="text" name="name" value="Raju">', 'lxml')

tag = soup.input

print (tag.attrs)

Output

{'type': 'text', 'name': 'name', 'value': 'Raju'}

We can do all kind of modifications to our tag's attributes (add/remove/modify), using dictionary operators or methods.

In the following example, the value tag is updated. The updated HTML string shows changes.

Example - Updating tag value

from bs4 import BeautifulSoup

soup = BeautifulSoup('<input type="text" name="name" value="Raju">', 'lxml')

tag = soup.input

print (tag.attrs)

tag['value']='Ravi'

print (soup)

Output

<html><body><input name="name" type="text" value="Ravi"/></body></html>

We add a new id tag, and delete the value tag.

Example - Adding/Deleting Tag

from bs4 import BeautifulSoup

soup = BeautifulSoup('<input type="text" name="name" value="Raju">', 'lxml')

tag = soup.input

tag['id']='nm'

del tag['value']

print (soup)

Output

<html><body><input id="nm" name="name" type="text"/></body></html>

Multi-valued attributes

Some of the HTML5 attributes can have multiple values. Most commonly used is the class-attribute which can have multiple CSS-values. Others include 'rel', 'rev', 'headers', 'accesskey' and 'accept-charset'. The multi-valued attributes in beautiful soup are shown as list.

Example - Using multivalued attributes

from bs4 import BeautifulSoup

css_soup = BeautifulSoup('<p class="body"></p>', 'lxml')

print ("css_soup.p['class']:", css_soup.p['class'])

css_soup = BeautifulSoup('<p class="body bold"></p>', 'lxml')

print ("css_soup.p['class']:", css_soup.p['class'])

Output

css_soup.p['class']: ['body'] css_soup.p['class']: ['body', 'bold']

However, if any attribute contains more than one value but it is not multi-valued attributes by any-version of HTML standard, beautiful soup will leave the attribute alone −

Example - Invalid attribute values

from bs4 import BeautifulSoup

id_soup = BeautifulSoup('<p id="body bold"></p>', 'lxml')

print ("id_soup.p['id']:", id_soup.p['id'])

print ("type(id_soup.p['id']):", type(id_soup.p['id']))

Output

id_soup.p['id']: body bold type(id_soup.p['id']): <class 'str'>

NavigableString object

Usually, a certain string is placed in opening and closing tag of a certain type. The HTML engine of the browser applies the intended effect on the string while rendering the element. For example , in <b>Hello World</b>, you find a string in the middle of <b> and </b> tags so that it is rendered in bold.

The NavigableString object represents the contents of a tag. It is an object of bs4.element.NavigableString class. To access the contents, use ".string" with tag.

Example - Printing type of NavigableString

from bs4 import BeautifulSoup

soup = BeautifulSoup("<h2 id='message'>Hello, Tutorialspoint!</h2>", 'html.parser')

print (soup.string)

print (type(soup.string))

Output

Hello, Tutorialspoint! <class 'bs4.element.NavigableString'>

A NavigableString object is similar to a Python Unicode string. some of its features support Navigating the tree and Searching the tree. A NavigableString can be converted to a Unicode string with str() function.

Example - Converting NavigableString to String

from bs4 import BeautifulSoup

soup = BeautifulSoup("<h2 id='message'>Hello, Tutorialspoint!</h2>",'html.parser')

tag = soup.h2

string = str(tag.string)

print (string)

Output

Hello, Tutorialspoint!

Just as a Python string, which is immutable, the NavigableString also can't be modified in place. However, use replace_with() to replace the inner string of a tag with another.

Example - Updating a NavigableString

from bs4 import BeautifulSoup

soup = BeautifulSoup("<h2 id='message'>Hello, Tutorialspoint!</h2>",'html.parser')

tag = soup.h2

tag.string.replace_with("OnLine Tutorials Library")

print (tag.string)

Output

OnLine Tutorials Library

BeautifulSoup object

The BeautifulSoup object represents the entire parsed object. However, it can be considered to be similar to Tag object. It is the object created when we try to scrape a web resource. Because it is similar to a Tag object, it supports the functionality required to parse and search the document tree.

Example - Usage of BeautifulSoup

from bs4 import BeautifulSoup

fp = open("index.html")

soup = BeautifulSoup(fp, 'html.parser')

print (soup)

print (soup.name)

print ('type:',type(soup))

Output

<html> <head> <title>TutorialsPoint</title> </head> <body> <h2>Departmentwise Employees</h2> <ul> <li>Accounts</li> <ul> <li>Anand</li> <li>Mahesh</li> </ul> <li>HR</li> <ul> <li>Rani</li> <li>Ankita</li> </ul> </ul> </body> </html> [document] type: <class 'bs4.BeautifulSoup'>

The name property of BeautifulSoup object always returns [document].

Two parsed documents can be combined if you pass a BeautifulSoup object as an argument to a certain function such as replace_with().

Example - Updating content using BeautifulSoup

from bs4 import BeautifulSoup

obj1 = BeautifulSoup("<book><title>Python</title></book>", features="xml")

obj2 = BeautifulSoup("<b>Beautiful Soup parser</b>", "lxml")

obj2.find('b').replace_with(obj1)

print (obj2)

Output

<html><body><book><title>Python</title></book></body></html>

Comment object

Any text written between <!-- and --> in HTML as well as XML document is treated as comment. BeautifulSoup can detect such commented text as a Comment object.

Example - Usage of Comment in Beautiful Soup

from bs4 import BeautifulSoup markup = "<b><!--This is a comment text in HTML--></b>" soup = BeautifulSoup(markup, 'html.parser') comment = soup.b.string print (comment, type(comment))

Output

This is a comment text in HTML <class 'bs4.element.Comment'>

The Comment object is a special type of NavigableString object. The prettify() method displays the comment text with special formatting −

Example - Formatting a Comment

print (soup.b.prettify())

Output

<b> <!--This is a comment text in HTML--> </b>

Beautiful Soup - Inspect Data Source

In order to scrape a web page with BeautifulSoup and Python, your first step for any web scraping project should be to explore the website that you want to scrape. So, first visit the website to understand the site structure before you start extracting the information that's relevant for you.

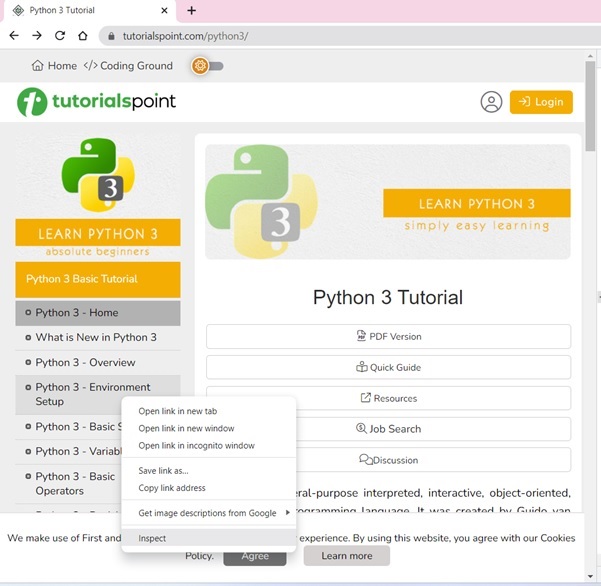

Let us visit TutorialsPoint's Python Tutorial home page. Open https://www.tutorialspoint.com/python/index.htm in your browser.

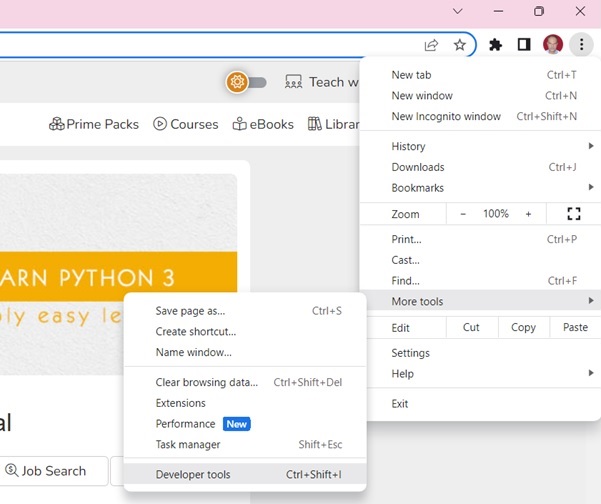

Use Developer tools can help you understand the structure of a website. All modern browsers come with developer tools installed.

If using Chrome browser, open the Developer Tools from the top-right menu button (⋮) and selecting More Tools → Developer Tools.

With Developer tools, you can explore the site's document object model (DOM) to better understand your source. Select the Elements tab in developer tools. You'll see a structure with clickable HTML elements.

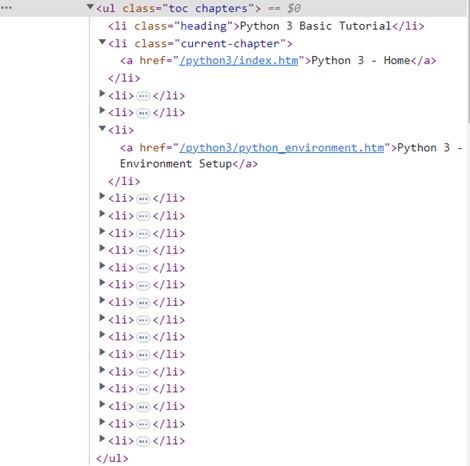

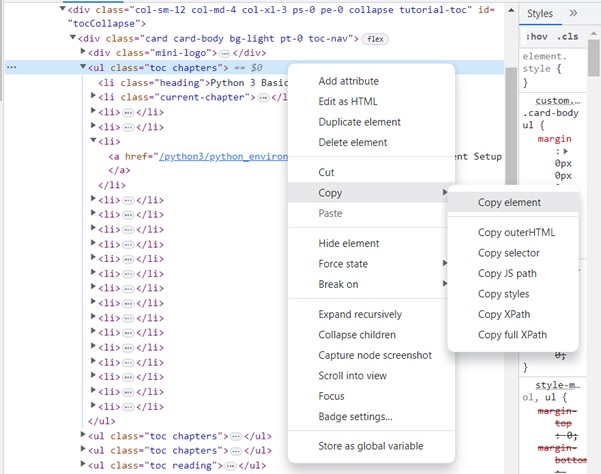

The Tutorial page shows the table of contents in the left sidebar. Right click on any chapter and choose Inspect option.

For the Elements tab, locate the tag that corresponds to the TOC list, as shown in the figure below −

Right click on the HTML element, copy the HTML element, and paste it in any editor.

The HTML script of the <ul>..</ul> element is now obtained.

<ul class="toc chapters"> <li class="heading">Python 3 Basic Tutorial</li> <li class="current-chapter"><a href="/python3/index.htm">Python 3 - Home</a></li> <li><a href="/python3/python3_whatisnew.htm">What is New in Python 3</a></li> <li><a href="/python3/python_overview.htm">Python 3 - Overview</a></li> <li><a href="/python3/python_environment.htm">Python 3 - Environment Setup</a></li> <li><a href="/python3/python_basic_syntax.htm">Python 3 - Basic Syntax</a></li> <li><a href="/python3/python_variable_types.htm">Python 3 - Variable Types</a></li> <li><a href="/python3/python_basic_operators.htm">Python 3 - Basic Operators</a></li> <li><a href="/python3/python_decision_making.htm">Python 3 - Decision Making</a></li> <li><a href="/python3/python_loops.htm">Python 3 - Loops</a></li> <li><a href="/python3/python_numbers.htm">Python 3 - Numbers</a></li> <li><a href="/python3/python_strings.htm">Python 3 - Strings</a></li> <li><a href="/python3/python_lists.htm">Python 3 - Lists</a></li> <li><a href="/python3/python_tuples.htm">Python 3 - Tuples</a></li> <li><a href="/python3/python_dictionary.htm">Python 3 - Dictionary</a></li> <li><a href="/python3/python_date_time.htm">Python 3 - Date & Time</a></li> <li><a href="/python3/python_functions.htm">Python 3 - Functions</a></li> <li><a href="/python3/python_modules.htm">Python 3 - Modules</a></li> <li><a href="/python3/python_files_io.htm">Python 3 - Files I/O</a></li> <li><a href="/python3/python_exceptions.htm">Python 3 - Exceptions</a></li> </ul>

We can now load this script in a BeautifulSoup object to parse the document tree.

Beautiful Soup - Scrape HTML Content

The process of extracting data from websites is called Web scraping. A web page may have urls, Email addresses, images or any other content, which we can be stored in a file or database. Searching a website manually is cumbersome process. There are different web scaping tools that automate the process.

Web scraping is is sometimes prohibited by the use of 'robots.txt' file. Some popular sites provide APIs to access their data in a structured way. Unethical web scraping may result in getting your IP blocked.

Python is widely used for web scraping. Python standard library has urllib package, which can be used to extract data from HTML pages. Since urllib module is bundled with the standard library, it need not be installed.

The urllib package is an HTTP client for python programming language. The urllib.request module is usefule when we want to open and read URLs. Other module in urllib package are −

urllib.error defines the exceptions and errors raised by the urllib.request command.

urllib.parse is used for parsing URLs.

urllib.robotparser is used for parsing robots.txt files.

Use the urlopen() function in urllib module to read the content of a web page from a website.

import urllib.request

response = urllib.request.urlopen('http://python.org/')

html = response.read()

You can also use the requests library for this purpose. You need to install it before using.

pip3 install requests

In the below code, the homepage of https://www.tutorialspoint.com is scraped −

from bs4 import BeautifulSoup import requests url = "https://www.tutorialspoint.com/index.htm" req = requests.get(url)

The content obtained by either of the above two methods are then parsed with Beautiful Soup.

Beautiful Soup - Navigating By Tags

One of the important pieces of element in any piece of HTML document are tags, which may contain other tags/strings (tag's children). Beautiful Soup provides different ways to navigate and iterate over's tag's children.

Easiest way to search a parse tree is to search the tag by its name.

soup.head

The soup.head function returns the contents put inside the <head> .. </head> element of a HTML page.

Following code extracts the contents of <head> element

Example - Extracting Head

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

<script>

document.write("Welcome to TutorialsPoint");

</script>

</head>

<body>

<h1>Tutorialspoint Online Library</h1>

<p><b>It's all Free</b></p>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

print(soup.head)

Output

<head>

<title>TutorialsPoint</title>

<script>

document.write("Welcome to TutorialsPoint");

</script>

</head>

soup.body

Similarly, to return the contents of body part of HTML page, use soup.body

Example - Extracting html Body

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

<script>

document.write("Welcome to TutorialsPoint");

</script>

</head>

<body>

<h1>Tutorialspoint Online Library</h1>

<p><b>It's all Free</b></p>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

print (soup.body)

Output

<body> <h1>Tutorialspoint Online Library</h1> <p><b>It's all Free</b></p> </body>

You can also extract specific tag (like first <h1> tag) in the <body> tag.

Example - Extracting specific tag

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

<script>

document.write("Welcome to TutorialsPoint");

</script>

</head>

<body>

<h1>Tutorialspoint Online Library</h1>

<p><b>It's all Free</b></p>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

print(soup.body.h1)

Output

<h1>Tutorialspoint Online Library</h1>

soup.p

Our HTML file contains a <p> tag. We can extract the contents of this tag

Example - Extract content of <p> tag

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

<script>

document.write("Welcome to TutorialsPoint");

</script>

</head>

<body>

<h1>Tutorialspoint Online Library</h1>

<p><b>It's all Free</b></p>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

print(soup.p)

Output

<p><b>It's all Free</b></p>

Tag.contents

A Tag object may have one or more PageElements. The Tag object's contents property returns a list of all elements included in it.

Let us find the elements in <head> tag of our index.html file.

Example - Finding elements of <head> tag

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

<script>

document.write("Welcome to TutorialsPoint");

</script>

</head>

<body>

<h1>Tutorialspoint Online Library</h1>

<p><b>It's all Free</b></p>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.head

print (tag.contents)

Output

['\n',

<title>TutorialsPoint</title>,

'\n',

<script>

document.write("Welcome to TutorialsPoint");

</script>,

'\n']

Tag.children

The structure of tags in a HTML script is hierarchical. The elements are nested one inside the other. For example, the top level <HTML> tag includes <HEAD> and <BODY> tags, each may have other tags in it.

The Tag object has a children property that returns a list iterator object containing the enclosed PageElements.

To demonstrate the children property, we shall use the following HTML script (index.html). In the <body> section, there are two <ul> list elements, one nested in another. In other words, the body tag has top level list elements, and each list element has another list under it.

The following Python code gives a list of all the children elements of top level <ul> tag.

Example - Getting List of chidren elements

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h2>Departmentwise Employees</h2>

<ul>

<li>Accounts</li>

<ul>

<li>Anand</li>

<li>Mahesh</li>

</ul>

<li>HR</li>

<ul>

<li>Rani</li>

<li>Ankita</li>

</ul>

</ul>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.ul

print (list(tag.children))

Output

['\n', <li>Accounts</li>, '\n', <ul> <li>Anand</li> <li>Mahesh</li> </ul>, '\n', <li>HR</li>, '\n', <ul> <li>Rani</li> <li>Ankita</li> </ul>, '\n']

Since the .children property returns a list_iterator, we can use a for loop to traverse the hierarchy.

Example - Traversing a hiearchy

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h2>Departmentwise Employees</h2>

<ul>

<li>Accounts</li>

<ul>

<li>Anand</li>

<li>Mahesh</li>

</ul>

<li>HR</li>

<ul>

<li>Rani</li>

<li>Ankita</li>

</ul>

</ul>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.ul

for child in tag.children:

print (child)

Output

<li>Accounts</li> <ul> <li>Anand</li> <li>Mahesh</li> </ul> <li>HR</li> <ul> <li>Rani</li> <li>Ankita</li> </ul>

Tag.find_all()

This method returns a result set of contents of all the tags matching with the argument tag provided.

The following code lists all the elements with <a> tag

Example - List all elements with <a> tag

from bs4 import BeautifulSoup

html = """

<html>

<body>

<h1>Tutorialspoint Online Library</h1>

<p><b>It's all Free</b></p>

<a class="prog" href="https://www.tutorialspoint.com/java/java_overview.htm" id="link1">Java</a>

<a class="prog" href="https://www.tutorialspoint.com/cprogramming/index.htm" id="link2">C</a>

<a class="prog" href="https://www.tutorialspoint.com/python/index.htm" id="link3">Python</a>

<a class="prog" href="https://www.tutorialspoint.com/javascript/javascript_overview.htm" id="link4">JavaScript</a>

<a class="prog" href="https://www.tutorialspoint.com/ruby/index.htm" id="link5">C</a>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

result = soup.find_all("a")

print (result)

Output

[ <a class="prog" href="https://www.tutorialspoint.com/java/java_overview.htm" id="link1">Java</a>, <a class="prog" href="https://www.tutorialspoint.com/cprogramming/index.htm" id="link2">C</a>, <a class="prog" href="https://www.tutorialspoint.com/python/index.htm" id="link3">Python</a>, <a class="prog" href="https://www.tutorialspoint.com/javascript/javascript_overview.htm" id="link4">JavaScript</a>, <a class="prog" href="https://www.tutorialspoint.com/ruby/index.htm" id="link5">C</a> ]

Beautiful Soup - Find Elements by ID

In an HTML document, usually each element is assigned a unique ID. This enables the value of an element to be extracted by a front-end code such as JavaScript function.

With BeautifulSoup, you can find the contents of a given element by its ID. There are two methods by which this can be achieved - find() as well as find_all(), and select()

Using find() method

The find() method of BeautifulSoup object searches for first element that satisfies the given criteria as an argument.

The following Python code finds the element with its id as nm

Example - Find an Element by ID

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.find(id = 'nm')

print (obj)

Output

<input id="nm" name="name" type="text"/>

Using find_all() method

The find_all() method also accepts a filter argument. It returns a list of all the elements with the given id. In a certain HTML document, usually a single element with a particular id. Hence, using find() instead of find_all() is preferrable to search for a given id.

Example - Find elements by ID

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.find_all(id = 'nm')

print (obj)

Output

[<input id="nm" name="name" type="text"/>]

Note that the find_all() method returns a list. The find_all() method also has a limit parameter. Setting limit=1 to find_all() is equivalent to find()

obj = soup.find_all(id = 'nm', limit=1)

Using select() method

The select() method in BeautifulSoup class accepts CSS selector as an argument. The # symbol is the CSS selector for id. It followed by the value of required id is passed to select() method. It works as the find_all() method.

Example - Find Element using CSS Selector

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.select("#nm")

print (obj)

Output

[<input id="nm" name="name" type="text"/>]

Using select_one() method

Like the find_all() method, the select() method also returns a list. There is also a select_one() method to return the first tag of the given argument.

Example - Find Elements by CSS Selector

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.select_one("#nm")

print (obj)

Output

<input id="nm" name="name" type="text"/>

Beautiful Soup - Find Elements by Class

CSS (cascaded Style sheets) is a tool for designing the appearance of HTML elements. CSS rules control the different aspects of HTML element such as size, color, alignment etc.. Applying styles is more effective than defining HTML element attributes. You can apply styling rules to each HTML element. Instead of applying style to each element individually, CSS classes are used to apply similar styling to groups of HTML elements to achieve uniform web page appearance. In BeautifulSoup, it is possible to find tags styled with CSS class. In this chapter, we shall use the following methods to search for elements for a specified CSS class −

- find_all() and find() methods

- select() and select_one() methods

Class in CSS

A class in CSS is a collection of attributes specifying the different features related to appearance, such as font type, size and color, background color, alignment etc. Name of the class is prefixed with a dot (.) while declaring it.

.class {

css declarations;

}

A CSS class may be defined inline, or in a separate css file which needs to be included in the HTML script. A typical example of a CSS class could be as follows −

.blue-text {

color: blue;

font-weight: bold;

}

You can search for HTML elements defined with a certain class style with the help of following BeautifulSoup methods.

Using find() and find_all()

To search for elements with a certain CSS class used in a tag, use attrs property of Tag object as follows −

Example - Finding elements by a CSS class using attr property

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h2 class="heading">Departmentwise Employees</h2>

<ul>

<li class="mainmenu">Accounts</li>

<ul>

<li class="submenu">Anand</li>

<li class="submenu">Mahesh</li>

</ul>

<li class="mainmenu">HR</li>

<ul>

<li class="submenu">Rani</li>

<li class="submenu">Ankita</li>

</ul>

</ul>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.find_all(attrs={"class": "mainmenu"})

print (obj)

Output

[<li class="mainmenu">Accounts</li>, <li class="mainmenu">HR</li>]

The result is a list of all the elements with mainmenu class

To fetch the list of elements with any of the CSS classes mentioned in in attrs property, change the find_all() statement to −

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h2 class="heading">Departmentwise Employees</h2>

<ul>

<li class="mainmenu">Accounts</li>

<ul>

<li class="submenu">Anand</li>

<li class="submenu">Mahesh</li>

</ul>

<li class="mainmenu">HR</li>

<ul>

<li class="submenu">Rani</li>

<li class="submenu">Ankita</li>

</ul>

</ul>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.find_all(attrs={"class": ["mainmenu", "submenu"]})

print (obj)

This results into a list of all the elements with any of CSS classes used above.

[ <li class="mainmenu">Accounts</li>, <li class="submenu">Anand</li>, <li class="submenu">Mahesh</li>, <li class="mainmenu">HR</li>, <li class="submenu">Rani</li>, <li class="submenu">Ankita</li> ]

Using select() and select_one()

You can also use select() method with the CSS selector as the argument. The (.) symbol followed by the name of the class is used as the CSS selector.

Example - Finding elements using a CSS class directly

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h2 class="heading">Departmentwise Employees</h2>

<ul>

<li class="mainmenu">Accounts</li>

<ul>

<li class="submenu">Anand</li>

<li class="submenu">Mahesh</li>

</ul>

<li class="mainmenu">HR</li>

<ul>

<li class="submenu">Rani</li>

<li class="submenu">Ankita</li>

</ul>

</ul>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.select(".heading")

print (obj)

Output

[<h2 class="heading">Departmentwise Employees</h2>]

The select_one() method returns the first element found with the given class.

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h2 class="heading">Departmentwise Employees</h2>

<ul>

<li class="mainmenu">Accounts</li>

<ul>

<li class="submenu">Anand</li>

<li class="submenu">Mahesh</li>

</ul>

<li class="mainmenu">HR</li>

<ul>

<li class="submenu">Rani</li>

<li class="submenu">Ankita</li>

</ul>

</ul>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.select_one(".submenu")

print (obj)

Output

<li class="submenu">Anand</li>

Beautiful Soup - Find Elements by Attributes

Both find() and find_all() methods are meant to find one or all the tags in the document as per the arguments passed to these methods. You can pass attrs parameter to these functions. The value of attrs must be a dictionary with one or more tag attributes and their values.

Using find_all() method

The following program returns a list of all the tags having input type="text" attribute.

Example - Finding tags having input type as text

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<h1>TutorialsPoint</h1>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.find_all(attrs={"type":'text'})

print (obj)

Output

[<input id="nm" name="name" type="text"/>, <input id="age" name="age" type="text"/>, <input id="marks" name="marks" type="text"/>]

Using find() method

The find() method returns the first tag in the parsed document that has the given attributes.

Example - Finding first tag having name as marks

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.find(attrs={"name":'marks'})

print (obj)

Output

<input id="marks" name="marks" type="text"/>

Using select() method

The select() method can be called by passing the attributes to be compared against. The attributes must be put in a list object. It returns a list of all tags that have the given attribute.

In the following code, the select() method returns all the tags with type attribute.

Example - Finding all tags with given type

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.select("[type]")

print (obj)

Output

[<input id="nm" name="name" type="text"/>, <input id="age" name="age" type="text"/>, <input id="marks" name="marks" type="text"/>]

Using select_one() method

The select_one() is method is similar, except that it returns the first tag satisfying the given filter.

Example - Finding first tag with given filter.

from bs4 import BeautifulSoup

html = """

<html>

<head>

<title>TutorialsPoint</title>

</head>

<body>

<form>

<input type = 'text' id = 'nm' name = 'name'>

<input type = 'text' id = 'age' name = 'age'>

<input type = 'text' id = 'marks' name = 'marks'>

</form>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

obj = soup.select_one("[name='marks']")

print(obj)

Output

<input id="marks" name="marks" type="text"/>

Beautiful Soup - Searching Tree

In this chapter, we shall discuss different methods in Beautiful Soup for navigating the HTML document tree in different directions - going up and down, sideways, and back and forth.

The name of required tag lets you navigate the parse tree. For example soup.head fetches you the <head> element −

Example - Extract a Head Tree

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

print (soup.head.prettify())

Output

<head>

<title>

TutorialsPoint

</title>

</head>

Going down

A tag may contain strings or other tags enclosed in it. The .contents property of Tag object returns a list of all the children elements belonging to it.

Example - Getting tree of a content of a Tag

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.head

print (list(tag.children))

Output

[<title>TutorialsPoint</title>]

The returned object is a list, although in this case, there is only a single child tag enclosed in head element.

Using .children property

The .children property also returns a list of all the enclosed elements in a tag. Below, all the elements in body tag are given as a list.

Example - List all enclosed elements

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.body

print (list(tag.children))

Output

['\n', <p class="title"><b>Online Tutorials Library</b></p>, '\n', <p class="story">TutorialsPoint has an excellent collection of tutorials on: <a class="lang" href="https://tutorialspoint.com/Python" id="link1">Python</a>, <a class="lang" href="https://tutorialspoint.com/Java" id="link2">Java</a> and <a class="lang" href="https://tutorialspoint.com/PHP" id="link3">PHP</a>; Enhance your Programming skills.</p>, '\n', <p class="tutorial">...</p>, '\n']

Instead of getting them as a list, you can iterate over a tag's children using the .children generator −

Example - Iterating a List

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.body

for child in tag.children:

print (child)

Output

<p class="title"><b>Online Tutorials Library</b></p> <p class="story">TutorialsPoint has an excellent collection of tutorials on: <a class="lang" href="https://tutorialspoint.com/Python" id="link1">Python</a>, <a class="lang" href="https://tutorialspoint.com/Java" id="link2">Java</a> and <a class="lang" href="https://tutorialspoint.com/PHP" id="link3">PHP</a>; Enhance your Programming skills.</p> <p class="tutorial">...</p>

Using .descendents attribute

The .contents and .children attributes only consider a tag's direct children. The .descendants attribute lets you iterate over all of a tag's children, recursively: its direct children, the children of its direct children, and so on.

The BeautifulSoup object is at the top of hierarchy of all the tags. Hence its .descendents property includes all the elements in the HTML string.

Example - Usage of descendents attributes

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

print (soup.descendants)

Output

<generator object Tag.descendants at 0x7fb9333a9970>

The .descendents attribute returns a generator, which can be iterated with a for loop. Here, we list out the descendents of the head tag.

Example - Listing descendents of head tag.

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.head

for element in tag.descendants:

print (element)

Output

<title>TutorialsPoint</title> TutorialsPoint

The head tag contains a title tag, which in turn encloses a NavigableString object TutorialsPoint. The <head> tag has only one child, but it has two descendants: the <title> tag and the <title> tag's child. But the BeautifulSoup object only has one direct child (the <html> tag), but it has many descendants.

Example - Getting Elements count

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tags = list(soup.descendants)

print (len(tags))

Output

27

Going Up

Just as you navigate the downstream of a document with children and descendents properties, BeautifulSoup offers .parent and .parent properties to navigate the upstream of a tag

Using .parent atttribute

every tag and every string has a parent tag that contains it. You can access an element's parent with the parent attribute. In our example, the <head> tag is the parent of the <title> tag.

Example - Using .parent attribute

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.title

print (tag.parent)

Output

<head><title>TutorialsPoint</title></head>

Since the title tag contains a string (NavigableString), the parent for the string is title tag itself.

Example - Getting Title Tag

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.title

string = tag.string

print (string.parent)

Output

<title>TutorialsPoint</title>

Using .parents property

You can iterate over all of an element's parents with .parents. This example uses .parents to travel from an <a> tag buried deep within the document, to the very top of the document. In the following code, we track the parents of the first <a> tag in the example HTML string.

Example - Usage of .parents property

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.a

print (tag.string)

for parent in tag.parents:

print (parent.name)

Output

Python p body html [document]

Sideways

The HTML tags appearing at the same indentation level are called siblings. Consider the following HTML snippet

<p>

<b>

Hello

</b>

<i>

Python

</i>

</p>

In the outer <p> tag, we have <b> and <i> tags at the same indent level, hence they are called siblings. BeautifulSoup makes it possible to navigate between the tags at same level.

.next_sibling and .previous_sibling

These attributes respectively return the next tag at the same level, and the previous tag at same level.

Example - Getting Siblings

from bs4 import BeautifulSoup

soup = BeautifulSoup("<p><b>Hello</b><i>Python</i></p>", 'html.parser')

tag1 = soup.b

print ("next:",tag1.next_sibling)

tag2 = soup.i

print ("previous:",tag2.previous_sibling)

Output

next: <i>Python</i> previous: <b>Hello</b>

Since the <b> tag doesn't have a sibling to its left, and <i> tag doesn't have a sibling to its right, it returns None in both cases.

Example - Checking siblings if not present

from bs4 import BeautifulSoup

soup = BeautifulSoup("<p><b>Hello</b><i>Python</i></p>", 'html.parser')

tag1 = soup.b

print ("next:",tag1.previous_sibling)

tag2 = soup.i

print ("previous:",tag2.next_sibling)

Output

next: None previous: None

.next_siblings and .previous_siblings

If there are two or more siblings to the right or left of a tag, they can be navigated with the help of the .next_siblings and .previous_siblings attributes respectively. Both of them return generator object so that a for loop can be used to iterate.

Let us use the following HTML snippet for this purpose −

<p>

<b>

Excellent

</b>

<i>

Python

</i>

<u>

Tutorial

</u>

</p>

Use the following code to traverse next and previous sibling tags.

Example - Traversing siblings

from bs4 import BeautifulSoup

soup = BeautifulSoup("<p><b>Excellent</b><i>Python</i><u>Tutorial</u></p>", 'html.parser')

tag1 = soup.b

print ("next siblings:")

for tag in tag1.next_siblings:

print (tag)

print ("previous siblings:")

tag2 = soup.u

for tag in tag2.previous_siblings:

print (tag)

Output

next siblings: <i>Python</i> <u>Tutorial</u> previous siblings: <i>Python</i> <b>Excellent</b>

Back and forth

In Beautiful Soup, the next_element property returns the next string or tag in the parse tree. On the other hand, the previous_element property returns the previous string or tag in the parse tree. Sometimes, the return value of next_element and previous_element attributes is similar to next_sibling and previous_sibling properties.

.next_element and .previous_element

Example - Usage of .next_element and .previous_element

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.find("a", id="link3")

print (tag.next_element)

tag = soup.find("a", id="link1")

print (tag.previous_element)

Output

PHP TutorialsPoint has an excellent collection of tutorials on:

The next_element after <a> tag with id = "link3" is the string PHP. Similarly, the previous_element returns the string before <a> tag with id = "link1".

.next_elements and .previous_elements

These attributes of the Tag object return generator respectively of all tags and strings after and before it.

Example - Iterating Next elements

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.find("a", id="link1")

for element in tag.next_elements:

print (element)

Output

Python , <a class="lang" href="https://tutorialspoint.com/Java" id="link2">Java</a> Java and <a class="lang" href="https://tutorialspoint.com/PHP" id="link3">PHP</a> PHP ; Enhance your Programming skills. <p class="tutorial">...</p> ...

Example - Iterating Previous elements

from bs4 import BeautifulSoup

html = """

<html><head><title>TutorialsPoint</title></head>

<body>

<p class="title"><b>Online Tutorials Library</b></p>

<p class="story">TutorialsPoint has an excellent collection of tutorials on:

<a href="https://tutorialspoint.com/Python" class="lang" id="link1">Python</a>,

<a href="https://tutorialspoint.com/Java" class="lang" id="link2">Java</a> and

<a href="https://tutorialspoint.com/PHP" class="lang" id="link3">PHP</a>;

Enhance your Programming skills.</p>

<p class="tutorial">...</p>

"""

soup = BeautifulSoup(html, 'html.parser')

tag = soup.find("body")

for element in tag.previous_elements:

print (element)

Output

<html><head><title>TutorialsPoint</title></head>

Beautiful Soup - Modifying Tree

One of the powerful features of Beautiful Soup library is to be able to be able to manipulate the parsed HTML or XML document and modify its contents.

Beautiful Soup library has different functions to perform the following operations −

Add contents or a new tag to an existing tag of the document

Insert contents before or after an existing tag or string

Clear the contents of an already existing tag

Modify the contents of a tag element

Add content

You can add to the content of an existing tag by using append() method on a Tag object. It works like the append() method of Python's list object.

In the following example, the HTML script has a <p> tag. With append(), additional text is appended.

Example - Adding Content to P Tag

from bs4 import BeautifulSoup

markup = '<p>Hello</p>'

soup = BeautifulSoup(markup, 'html.parser')

print (soup)

tag = soup.p

tag.append(" World")

print (soup)

Output

<p>Hello</p> <p>Hello World</p>

With the append() method, you can add a new tag at the end of an existing tag. First create a new Tag object with new_tag() method and then pass it to the append() method.

Example - Appending tag

from bs4 import BeautifulSoup, Tag

markup = '<b>Hello</b>'

soup = BeautifulSoup(markup, 'html.parser')

tag = soup.b

tag1 = soup.new_tag('i')

tag1.string = 'World'

tag.append(tag1)

print (soup.prettify())

Output

<b>

Hello

<i>

World

</i>

</b>

If you have to add a string to the document, you can append a NavigableString object.

Example - Appending a String

from bs4 import BeautifulSoup, NavigableString

markup = '<b>Hello</b>'

soup = BeautifulSoup(markup, 'html.parser')

tag = soup.b

new_string = NavigableString(" World")

tag.append(new_string)

print (soup.prettify())

Output

<b> Hello World </b>

From Beautiful Soup version 4.7 onwards, the extend() method has been added to Tag class. It adds all the elements in a list to the tag.

Example - Adding multiple elements

from bs4 import BeautifulSoup markup = '<b>Hello</b>' soup = BeautifulSoup(markup, 'html.parser') tag = soup.b vals = ['World.', 'Welcome to ', 'TutorialsPoint'] tag.extend(vals) print (soup.prettify())

Output

<b> Hello World. Welcome to TutorialsPoint </b>

Insert Contents

Instead of adding a new element at the end, you can use insert() method to add an element at the given position in a the list of children of a Tag element. The insert() method in Beautiful Soup behaves similar to insert() on a Python list object.

In the following example, a new string is added to the <b> tag at position 1. The resultant parsed document shows the result.

Example - Inserting a String

from bs4 import BeautifulSoup, NavigableString markup = '<b>Excellent </b><u>from TutorialsPoint</u>' soup = BeautifulSoup(markup, 'html.parser') tag = soup.b tag.insert(1, "Tutorial ") print (soup.prettify())

Output

<b> Excellent Tutorial </b> <u> from TutorialsPoint </u>

Beautiful Soup also has insert_before() and insert_after() methods. Their respective purpose is to insert a tag or a string before or after a given Tag object. The following code shows that a string "Python Tutorial" is added after the <b> tag.

Example - Inserting After string

from bs4 import BeautifulSoup, NavigableString

markup = '<b>Excellent </b><u>from TutorialsPoint</u>'

soup = BeautifulSoup(markup, 'html.parser')

tag = soup.b

tag.insert_after("Python Tutorial")

print (soup.prettify())

Output

<b> Excellent </b> Python Tutorial <u> from TutorialsPoint </u>

On the other hand, insert_before() method is used below, to add "Here is an " text before the <b> tag.

from bs4 import BeautifulSoup, NavigableString

markup = '<b>Excellent </b><u>from TutorialsPoint</u>'

soup = BeautifulSoup(markup, 'html.parser')

tag = soup.b

tag.insert_before("Here is an ")

print (soup.prettify())

Output

Here is an <b> Excellent </b> Python Tutorial <u> from TutorialsPoint </u>

Clear the Contents

Beautiful Soup provides more than one ways to remove contents of an element from the document tree. Each of these methods has its unique features.

The clear() method is the most straight-forward. It simply removes the contents of a specified Tag element. Following example shows its usage.

Example - Removing content of a Tag

from bs4 import BeautifulSoup, NavigableString

markup = '<b>Excellent </b><u>from TutorialsPoint</u>'

soup = BeautifulSoup(markup, 'html.parser')

tag = soup.find('u')

tag.clear()

print (soup.prettify())

Output

<b> Excellent </b> <u> </u>

It can be seen that the clear() method removes the contents, keeping the tag intact.

Here is the Python code using clear() method

Example - Usage of clear() method

from bs4 import BeautifulSoup

html = """

<html>

<body>

<p> The quick, brown fox jumps over a lazy dog.</p>

<p> DJs flock by when MTV ax quiz prog.</p>

<p> Junk MTV quiz graced by fox whelps.</p>

<p> Bawds jog, flick quartz, vex nymphs./p>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

tags = soup.find_all()

for tag in tags:

tag.clear()

print (soup.prettify())

Output

<html> </html>

The extract() method removes either a tag or a string from the document tree, and returns the object that was removed.

Example

from bs4 import BeautifulSoup

html = """

<html>

<body>

<p> The quick, brown fox jumps over a lazy dog.</p>

<p> DJs flock by when MTV ax quiz prog.</p>

<p> Junk MTV quiz graced by fox whelps.</p>

<p> Bawds jog, flick quartz, vex nymphs./p>

</body>

</html>

"""

soup = BeautifulSoup(html, 'html.parser')

tags = soup.find_all()

for tag in tags:

obj = tag.extract()

print ("Extracted:",obj)

print (soup)

Output

Extracted: <html> <body> <p> The quick, brown fox jumps over a lazy dog.</p> <p> DJs flock by when MTV ax quiz prog.</p> <p> Junk MTV quiz graced by fox whelps.</p> <p> Bawds jog, flick quartz, vex nymphs.</p> </body> </html> Extracted: <body> <p> The quick, brown fox jumps over a lazy dog.</p> <p> DJs flock by when MTV ax quiz prog.</p> <p> Junk MTV quiz graced by fox whelps.</p> <p> Bawds jog, flick quartz, vex nymphs.</p> </body> Extracted: <p> The quick, brown fox jumps over a lazy dog.</p> Extracted: <p> DJs flock by when MTV ax quiz prog.</p> Extracted: <p> Junk MTV quiz graced by fox whelps.</p> Extracted: <p> Bawds jog, flick quartz, vex nymphs.</p>

You can extract either a tag or a string. The following example shows a tag being extracted.

Example - Extracting a tag

html = '''

<ol id="HR">

<li>Rani</li>

<li>Ankita</li>

</ol>

'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'html.parser')

obj=soup.find('ol')

obj.find_next().extract()

print (soup)

Output

<ol id="HR"> <li>Ankita</li> </ol>

Change the extract() statement to remove inner text of first <li> element.

Example - Extract first element

html = '''

<ol id="HR">

<li>Rani</li>

<li>Ankita</li>

</ol>

'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'html.parser')

obj=soup.find('ol')

obj.find_next().string.extract()

print (soup)

Output

<ol id="HR"> <li>Ankita</li> </ol>

There is another method decompose() that removes a tag from the tree, then completely destroys it and its contents −

Example - Removing a tag from tree

html = '''

<ol id="HR">

<li>Rani</li>

<li>Ankita</li>

</ol>

'''

from bs4 import BeautifulSoup

soup = BeautifulSoup(html, 'html.parser')

tag1=soup.find('ol')

tag2 = soup.find('li')

tag2.decompose()

print (soup)

print (tag2.decomposed)

Output

<ol id="HR"> <li>Ankita</li> </ol>

The decomposed property returns True or False - whether an element has been decomposed or not.

Modify the Contents

We shall look at the replace_with() method that allows contents of a tag to be replaced.

Just as a Python string, which is immutable, the NavigableString also can't be modified in place. However, use replace_with() to replace the inner string of a tag with another.

Example - Replace Tag Content

from bs4 import BeautifulSoup

soup = BeautifulSoup("<h2 id='message'>Hello, Tutorialspoint!</h2>",'html.parser')

tag = soup.h2

tag.string.replace_with("OnLine Tutorials Library")

print (tag.string)

Output

OnLine Tutorials Library

Here is another example to show the use of replace_with(). Two parsed documents can be combined if you pass a BeautifulSoup object as an argument to a certain function such as replace_with().2524

Example - Usage of replace_with() method

from bs4 import BeautifulSoup

obj1 = BeautifulSoup("<book><title>Python</title></book>", features="xml")

obj2 = BeautifulSoup("<b>Beautiful Soup parser</b>", "lxml")

obj2.find('b').replace_with(obj1)

print (obj2)

Output

<html><body><book><title>Python</title></book></body></html>

The wrap() method wraps an element in the tag you specify. It returns the new wrapper.

from bs4 import BeautifulSoup

soup = BeautifulSoup("<p>Hello Python</p>", 'html.parser')

tag = soup.p

newtag = soup.new_tag('b')

tag.string.wrap(newtag)

print (soup)

Output

<p><b>Hello Python</b></p>

On the other hand, the unwrap() method replaces a tag with whatever's inside that tag. It's good for stripping out markup.

Example - Usage of unwrap() method