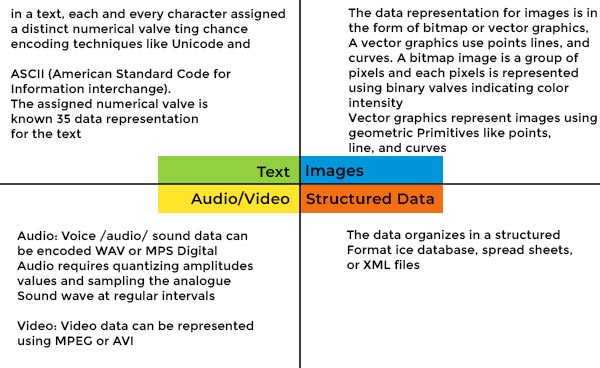

Representation of Data/Information

Computers do not understand human language; they work only with data in a prescribed form. Data representation is the method used to encode data inside a computer system. A user typically inputs numbers, text, images, audio, and video, and the computer converts this data to machine language (binary) before processing it.

Some Common Data Representation Methods Include

Data representation plays a vital role in storing, processing, and communicating data. A correct and efficient data representation method affects data processing performance and system compatibility.

Computers represent data in the following forms

Number System

A computer system treats numbers as data, including integers, decimals, and complex numbers. All numbers entered are represented internally in binary, using only the digits 0 and 1. Number systems are categorized into four types −

- Binary − The binary number system is the basis of all data representation in digital systems. It has only two digits, 0 and 1, so its base is 2. A binary number is written as (10110010)2. A computer system uses binary digits (0s and 1s) to represent data internally.

- Octal − The octal number system uses 8 digits: 0, 1, 2, 3, 4, 5, 6, and 7; so its base is 8. An octal number is written as (324017)8.

- Decimal − The decimal number system uses 10 digits: 0, 1, 2, 3, 4, 5, 6, 7, 8, and 9; so its base is 10. A decimal number is written as (875629)10.

- Hexadecimal − The hexadecimal number system uses 16 digits: 0, 1, 2, 3, 4, 5, 6, 7, 8, and 9, followed by the letters A, B, C, D, E, and F; so its base is 16. Here A represents 10, B represents 11, C represents 12, D represents 13, E represents 14, and F represents 15.

The table below summarises the number systems along with their base and digits.

| System | Base | Digits |
|---|---|---|
| Binary | 2 | 0 1 |
| Octal | 8 | 0 1 2 3 4 5 6 7 |
| Decimal | 10 | 0 1 2 3 4 5 6 7 8 9 |
| Hexadecimal | 16 | 0 1 2 3 4 5 6 7 8 9 A B C D E F |
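The conversions between these systems can be sketched in a few lines of Python. This is a minimal illustration, not part of the original tutorial; the helper name `to_base` is hypothetical, and it relies on repeated division by the base, which is one common way to convert a number by hand.

```python
def to_base(value: int, base: int) -> str:
    """Return the digits of a non-negative integer in the given base (2 to 16)."""
    digits = "0123456789ABCDEF"
    if not 2 <= base <= 16:
        raise ValueError("base must be between 2 and 16")
    if value < 0:
        raise ValueError("value must be non-negative")
    if value == 0:
        return "0"
    out = []
    while value > 0:
        # The remainder is the next digit, starting from the least significant.
        value, remainder = divmod(value, base)
        out.append(digits[remainder])
    return "".join(reversed(out))


n = int("10110010", 2)   # parse the binary example from the text
print(n)                 # 178
print(to_base(n, 8))     # 262
print(to_base(n, 16))    # B2
print(int("324017", 8))  # 108559, the decimal value of the octal example
```

Python's built-in `int(text, base)` handles the parsing direction, while `to_base` shows the reverse direction explicitly.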

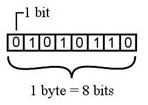

Bits and Bytes

Bits

A bit is the smallest unit of data that a computer uses; all computation performed by a computer system is based on bits. A bit represents a binary digit, either 0 or 1. Computers usually work with bits in groups. The bit is the basic unit of information storage and communication in digital computing.

Bytes

A group of eight bits is called a byte. Half a byte, that is, a group of four bits, is called a nibble. A byte is the fundamental addressable unit of computer memory and storage. It can represent a single character, such as a letter, digit, or symbol, using encoding methods such as ASCII and Unicode.
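As a small illustration of how bits group into nibbles and bytes, the sketch below splits one byte into its two nibbles and counts how many values a group of bits can hold. The function names `split_nibbles` and `distinct_values` are hypothetical and chosen only for this example.

```python
def split_nibbles(byte: int) -> tuple[int, int]:
    """Split one byte (0 to 255) into its high and low 4-bit nibbles."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("a byte must be in the range 0 to 255")
    # Shift right by 4 for the high nibble; mask the low 4 bits for the low nibble.
    return byte >> 4, byte & 0x0F


def distinct_values(bits: int) -> int:
    """Number of different values that a group of `bits` bits can hold."""
    return 2 ** bits


high, low = split_nibbles(0b10110010)
print(format(high, "04b"), format(low, "04b"))  # 1011 0010
print(distinct_values(4), distinct_values(8))   # 16 256
```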

Bytes are used to express file sizes, storage capacity, and available memory space. In the binary convention used here, a kilobyte (KB) is equal to 1,024 bytes, a megabyte (MB) is equal to 1,024 KB, and a gigabyte (GB) is equal to 1,024 MB. Small files are typically measured in KB, while memory and storage capacity are measured in MB and GB.

The following table shows the conversion of Bits and Bytes −

| Unit | Equivalent |
|---|---|
| 1 Byte | 8 Bits |
| 1 Kilobyte | 1024 Bytes |
| 1 Megabyte | 1024 Kilobytes |
| 1 Gigabyte | 1024 Megabytes |
| 1 Terabyte | 1024 Gigabytes |
| 1 Petabyte | 1024 Terabytes |
| 1 Exabyte | 1024 Petabytes |
| 1 Zettabyte | 1024 Exabytes |
| 1 Yottabyte | 1024 Zettabytes |
| 1 Brontobyte | 1024 Yottabytes |
| 1 Geopbyte | 1024 Brontobytes |

Brontobyte and geopbyte are informal names; they are not standardized unit prefixes.
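To show the 1,024-based conversion in practice, here is a minimal sketch that turns a raw byte count into a readable size. The function name `human_size`, the unit list, and the two-decimal formatting are assumptions made for this example.

```python
UNITS = ["bytes", "KB", "MB", "GB", "TB", "PB"]


def human_size(num_bytes: int) -> str:
    """Format a byte count using 1,024-based units, as in the table above."""
    if num_bytes < 0:
        raise ValueError("size must be non-negative")
    size = float(num_bytes)
    for unit in UNITS:
        # Stop at the first unit that keeps the number below 1,024,
        # or at the largest unit this sketch knows about.
        if size < 1024 or unit == UNITS[-1]:
            if unit == "bytes":
                return f"{int(size)} bytes"
            return f"{size:.2f} {unit}"
        size /= 1024
    raise AssertionError("unreachable: the last unit always returns")


print(human_size(500))        # 500 bytes
print(human_size(1536))       # 1.50 KB
print(human_size(1024 ** 3))  # 1.00 GB
```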

Text Code

A text code is a scheme that assigns a binary code to each character so that text can be stored, processed, and exchanged. It covers letters, punctuation marks, and other symbols. Some of the most commonly used text code systems are −

- EBCDIC

- ASCII

- Extended ASCII

- Unicode

EBCDIC

EBCDIC stands for Extended Binary Coded Decimal Interchange Code. IBM developed EBCDIC in the early 1960s and used it in its mainframe systems, such as System/360 and its successors. To meet commercial and data processing demands, it supports letters, numbers, punctuation marks, and special symbols. EBCDIC assigns different code values to characters than other encoding methods such as ASCII, so data encoded in one scheme cannot be read directly by a system that expects the other; it must first be converted. EBCDIC encodes each character as an 8-bit binary code and defines 256 symbols. The table below lists different characters along with their EBCDIC codes.
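The incompatibility between EBCDIC and ASCII can be seen by encoding the same text both ways. The sketch below assumes the `cp037` codec from Python's standard library, which is one of several EBCDIC code pages (US/Canada); other EBCDIC variants assign some characters differently.

```python
text = "HELLO 123"

# Encode the same text with an EBCDIC code page and with ASCII.
ebcdic = text.encode("cp037")
ascii_bytes = text.encode("ascii")

print(ebcdic.hex(" "))       # c8 c5 d3 d3 d6 40 f1 f2 f3
print(ascii_bytes.hex(" "))  # 48 45 4c 4c 4f 20 31 32 33

# Converting between the two means decoding with one scheme
# and re-encoding with the other.
converted = ebcdic.decode("cp037").encode("ascii")
assert converted == ascii_bytes
```

Every byte differs between the two encodings, which is why a conversion step is needed when moving text between the two kinds of system.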

ASCII

ASCII stands for American Standard Code for Information Interchange. It is a 7-bit code that specifies character values from 0 to 127, although each character is usually stored in an 8-bit byte. ASCII is a character encoding standard that assigns numerical values to characters, such as letters, digits, punctuation marks, and control characters, for use in computers and communication equipment.

ASCII originally defined 128 characters, encoded with 7 bits, allowing for 2^7 (128) potential characters. The ASCII standard specifies characters for the English alphabet (uppercase and lowercase), numerals from 0 to 9, punctuation marks, and control characters for formatting and control tasks such as line feed, carriage return, and tab.

| ASCII Code (binary) | Decimal Value | Character |
|---|---|---|
| 0000 0000 | 0 | Null |
| 0000 0001 | 1 | Start of heading |
| 0000 0010 | 2 | Start of text |
| 0000 0011 | 3 | End of text |
| 0000 0100 | 4 | End of transmission |
| 0000 0101 | 5 | Enquiry |
| 0000 0110 | 6 | Acknowledge |
| 0000 0111 | 7 | Audible bell |
| 0000 1000 | 8 | Backspace |
| 0000 1001 | 9 | Horizontal tab |
| 0000 1010 | 10 | Line Feed |
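The mapping between characters and ASCII codes can be explored with Python's built-in `ord` and `chr`. This is a minimal sketch; the helper name `ascii_bits` is hypothetical.

```python
def ascii_bits(ch: str) -> str:
    """Return the 7-bit ASCII code of a single character as a binary string."""
    code = ord(ch)
    if code > 127:
        raise ValueError(f"{ch!r} is not an ASCII character")
    return format(code, "07b")


for ch in ["A", "a", "0", "\t", "\n"]:
    print(repr(ch), ord(ch), ascii_bits(ch))
# 'A' 65 1000001
# 'a' 97 1100001
# '0' 48 0110000
# '\t' 9 0001001
# '\n' 10 0001010

print(chr(65))  # A
```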

Extended ASCII

Extended ASCII (Extended American Standard Code for Information Interchange) is an 8-bit code that adds character values from 128 to 255. It keeps the standard ASCII character set of 128 characters encoded in 7 bits and adds further characters that use the full 8 bits of a byte, giving a total of 256 possible characters.

Several different extended ASCII sets exist, each adding its own characters beyond the standard ASCII set. These additional characters may include symbols, letters, and special characters specific to a language or region.
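Because several extended sets exist, the same byte above 127 can mean different characters depending on which set is assumed. The sketch below uses two codecs from Python's standard library, `latin-1` (ISO 8859-1) and `cp437` (the original IBM PC code page), chosen here only as examples of 8-bit extensions of ASCII.

```python
raw = bytes([0x41, 0xE9])  # one ASCII byte (65) and one byte above 127 (233)

as_latin1 = raw.decode("latin-1")
as_cp437 = raw.decode("cp437")

print(as_latin1)  # Aé
print(as_cp437)   # 'A' followed by a different character

# The ASCII range (0 to 127) is the same in both sets; only the upper half differs.
print(as_latin1[0] == as_cp437[0])  # True
print(as_latin1[1] == as_cp437[1])  # False
```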

Extended ASCII Tabular column

Unicode

Unicode is a worldwide character standard whose encodings (UTF-8, UTF-16, and UTF-32) use between 8 and 32 bits to represent letters, numbers, and symbols. It is specifically designed to provide a consistent way to represent text in nearly all of the world's writing systems. Every character is assigned a unique numeric code, called a code point, regardless of platform, program, or language. Unicode offers a wide variety of characters, including alphabets, ideographs, symbols, and emojis.
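The difference between a character's code point and its encoded size can be checked directly in Python. This is a minimal sketch; the helper name `encoded_sizes` is hypothetical, and the little-endian codec variants are used so that no byte order mark is added to the output.

```python
def encoded_sizes(ch: str) -> tuple[str, int, int, int]:
    """Return the code point and the byte length in UTF-8, UTF-16, and UTF-32."""
    code_point = f"U+{ord(ch):04X}"
    return (
        code_point,
        len(ch.encode("utf-8")),
        len(ch.encode("utf-16-le")),
        len(ch.encode("utf-32-le")),
    )


for ch in ["A", "é", "€", "😀"]:
    print(ch, *encoded_sizes(ch))
# A U+0041 1 2 4
# é U+00E9 2 2 4
# € U+20AC 3 2 4
# 😀 U+1F600 4 4 4
```

The code point stays the same in every encoding; only the number of bytes used to store it changes.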

Unicode Tabular Column